HIPAA compliance often takes a backseat for medical startups. Competition is high, funding scarce, and deadlines merciless. When resources are limited, upfront costs seem like a huge burden. Such thinking is pervasive among business stakeholders.

However, HIPAA compliance is a must for startups that deal with sensitive health information or serve covered entities like hospitals or health insurance companies.

Fines of up to $50,000 are a well-known risk. What business people often don’t realize is that late compliance changes might require a LOT of extra work.

Take, for example, a recent project I worked on as a Lead DevOps engineer. Early on, the startup focused on reducing time to market and validating the idea of AI-powered drug testing. The MVP created by our healthcare software development company followed all basic security requirements, like encryption.

When it became clear the product needed additional certification for the US market, it already had 356 cloud resources described in Terraform. At that point, complying with HIPAA required 200+ hours of work for a Senior DevOps engineer.

Large changes also require extra testing and QA expenses. So, I highly recommend thinking about compliance as early as possible.

And to make the task easier for your team, I made a step-by-step guide on HIPAA compliance for startups using native AWS capabilities and infrastructure as code security.

Build a secure base for your project

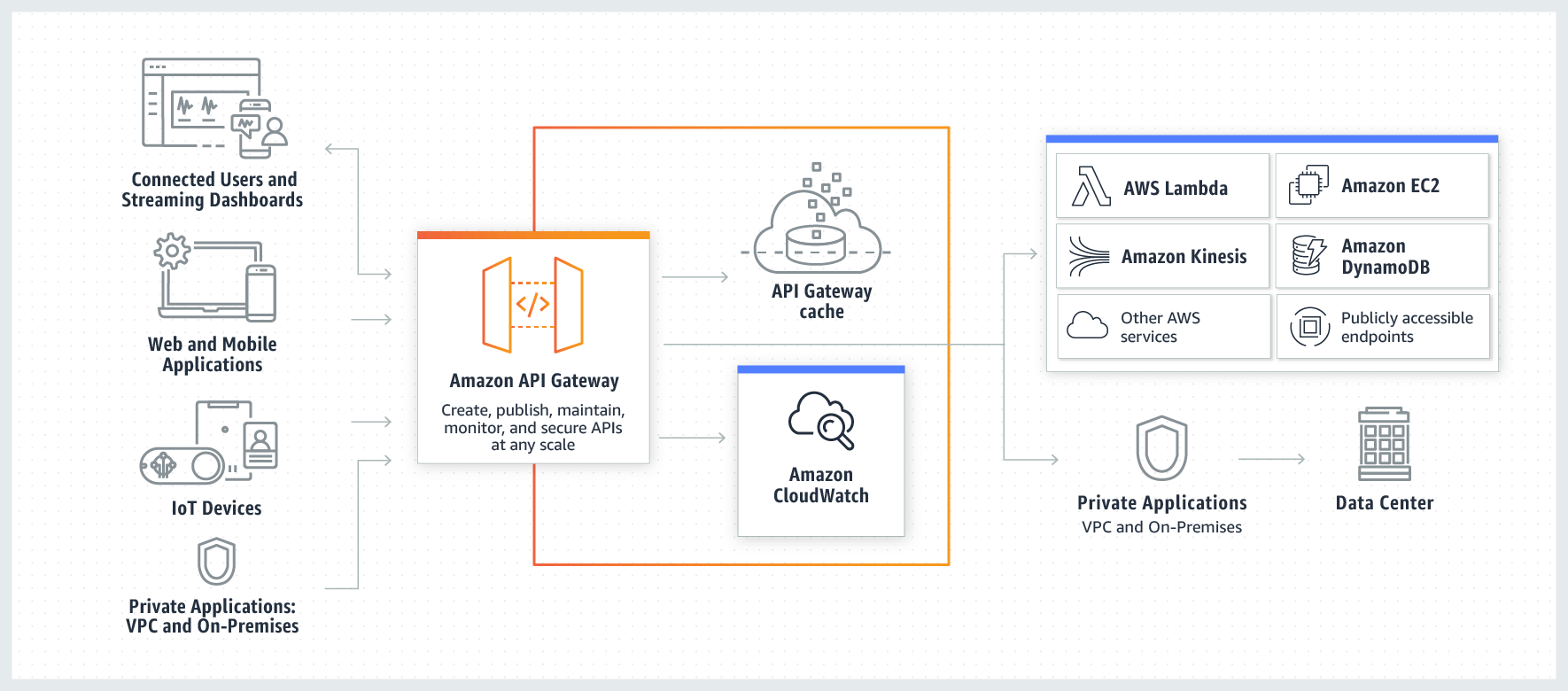

A good place to start is to look at Amazon’s best practices for serverless architecture. Here’s the list of AWS HIPAA best practices that formed the base for our MVP:

- Data encryption at rest and in transit with AWS Key Management Service (KMS) keys.

- Minimum necessary permissions for all roles and service policies.

- Separate public and private networks with appropriate security groups.

- Cost monitoring for all resources.

- Limits on the number of requests and parallel runs of Lambda functions.

- Alerts according to our budgets to prevent unexpectedly large invoices.

- CloudTrail, plus AWS Backup for the project database and S3 buckets.

- System monitoring and alerting with Datadog and CloudWatch.

Set up your Amazon management account

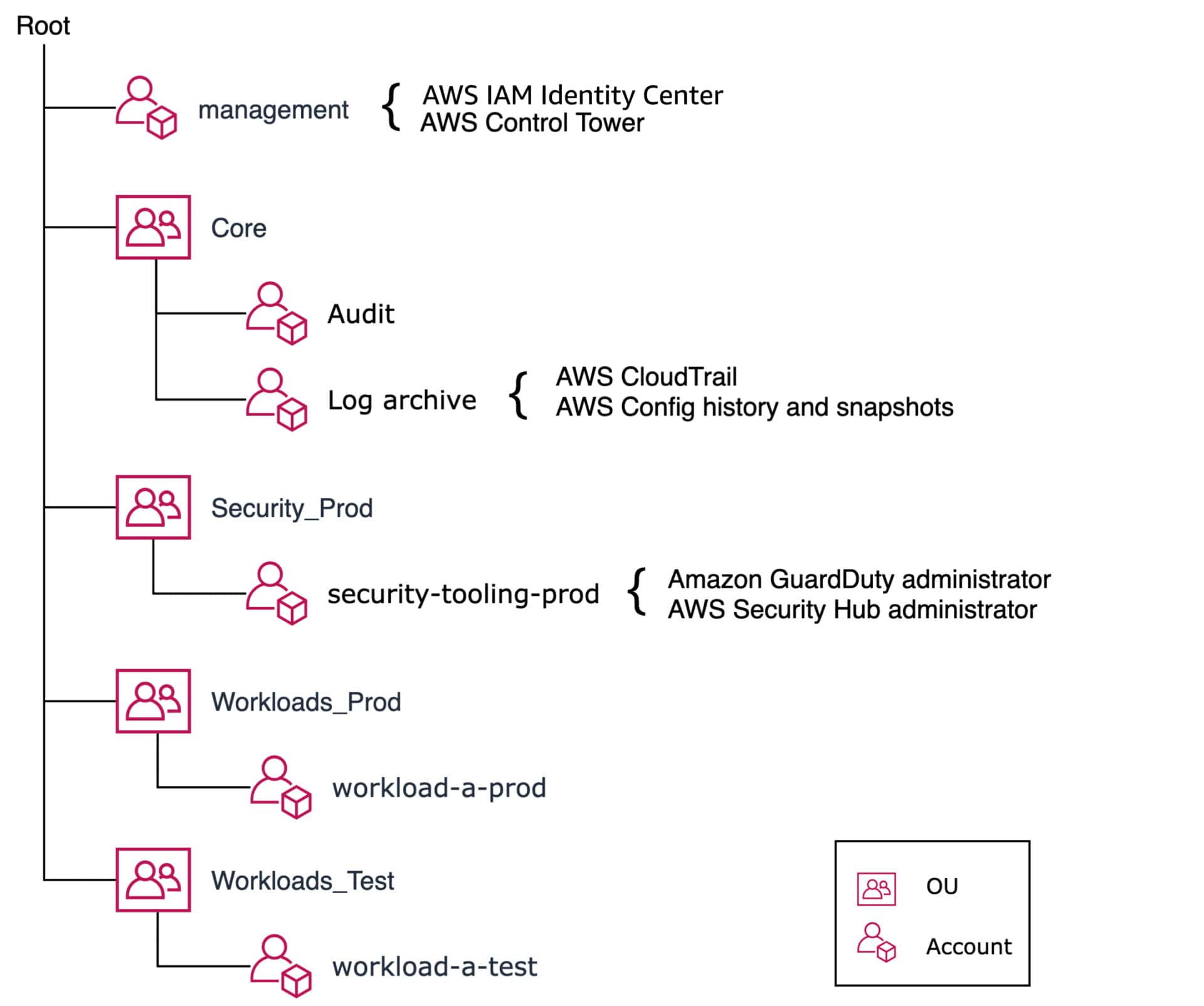

We recommend setting up a management account for your organization’s AWS Managed Services to start on the road to HIPAA compliance. Later, you can add different user roles and limit access using AWS IAM Identity Center permission sets.

Creating all environments in one account is a common mistake on large projects. Making global changes without the risk of breaking something in the production environment is challenging. Managing access rights is a nightmare if you do it this way.

A better solution is to create separate subaccounts for each environment.

This multi-account setup allows for the isolation of cloud resources and application data. By default, AWS blocks any access between accounts. You will have different teams with different security permissions and compliance controls. AWS provides clear security boundaries, controls for limits and throttling, as well as separation of billing.

Depending on the project, dozens or even hundreds of accounts may be required, a difficult task without automation.

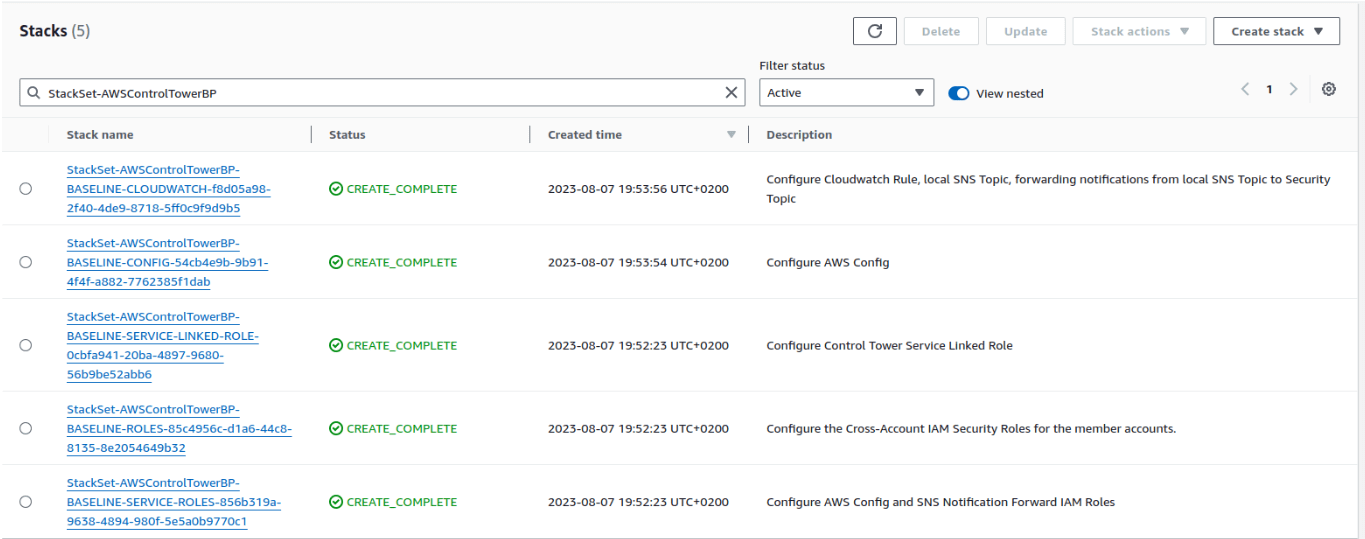

AWS Control Tower allows immediate organization of secure, simple, and correct access to other accounts within your environments.

At this point, it makes sense to set up a centralized AWS Config and AWS Security Hub in your management account. They aggregate data and manage service settings in your subaccounts. Remember to double-check the service settings separately in each of these subaccounts.

Basic multi-account environments setup using AWS Control Tower for supporting workloads in prod

Source: Organizing Your AWS Environment Using Multiple Accounts

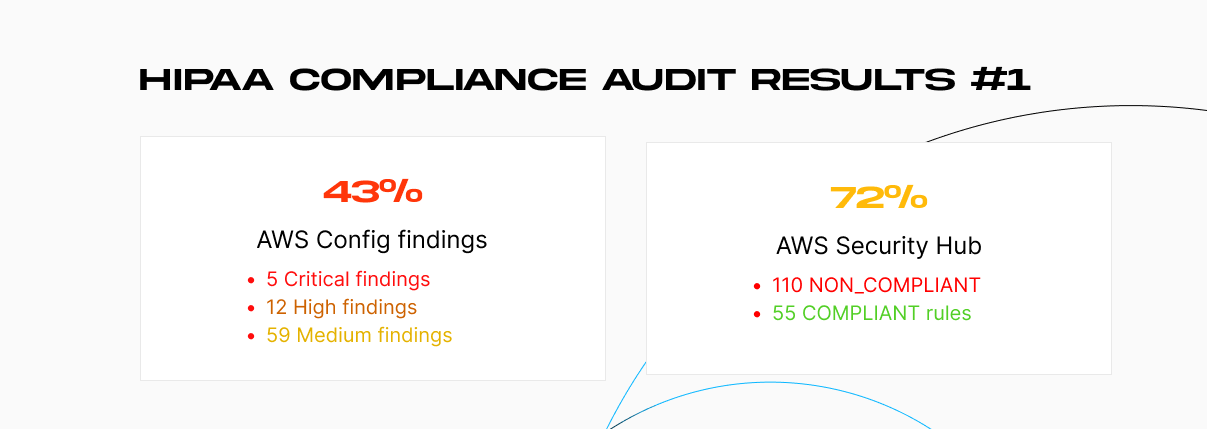

Run the first AWS Config/Security Hub audit

There are many tools that allow you to scan infrastructure and get HIPAA compliance recommendations. As a rule, they all require expensive subscriptions.

In contrast, AWS Config and AWS Security Hub add little extra cost. They are already integrated into the provider’s infrastructure. They have a ton of positive reviews from engineers.

Starting is as simple as pressing a few buttons in the AWS console. Wait for about a day as Amazon scans the environments and collects the necessary data. These services provide detailed recommendations together with a total security & compliance score.

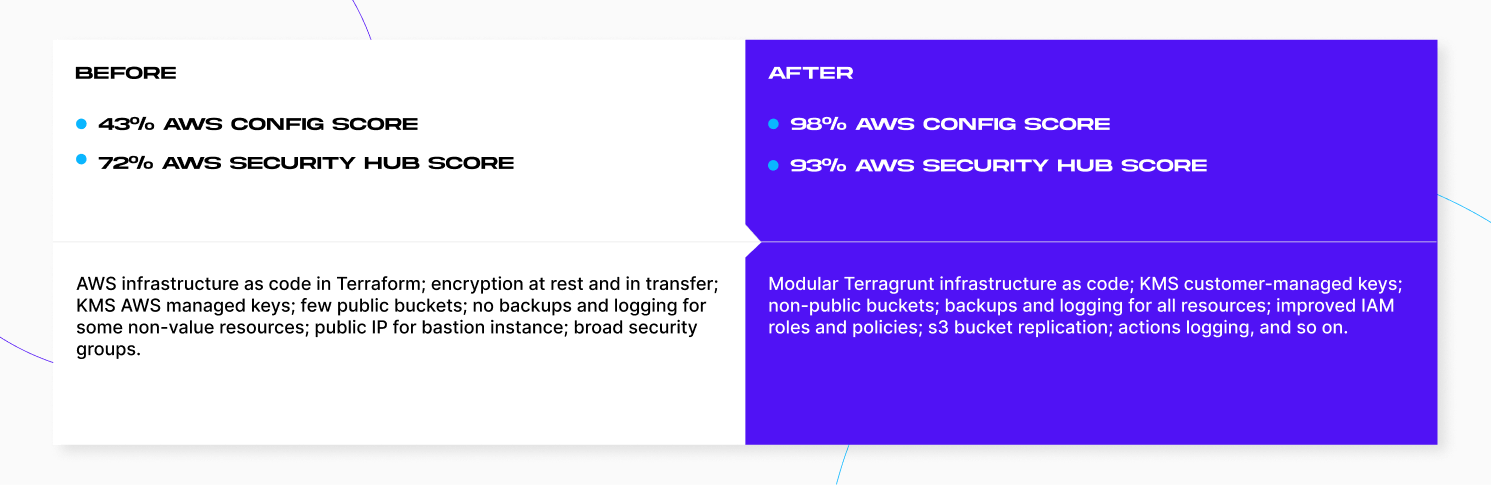

Our first run showed a troubling reality:

- 43% AWS Config score (110 NON_COMPLIANT rules, 55 COMPLIANT rules).

- 72% AWS Security Hub score (Findings: 5 Critical, 12 High, 59 Medium).

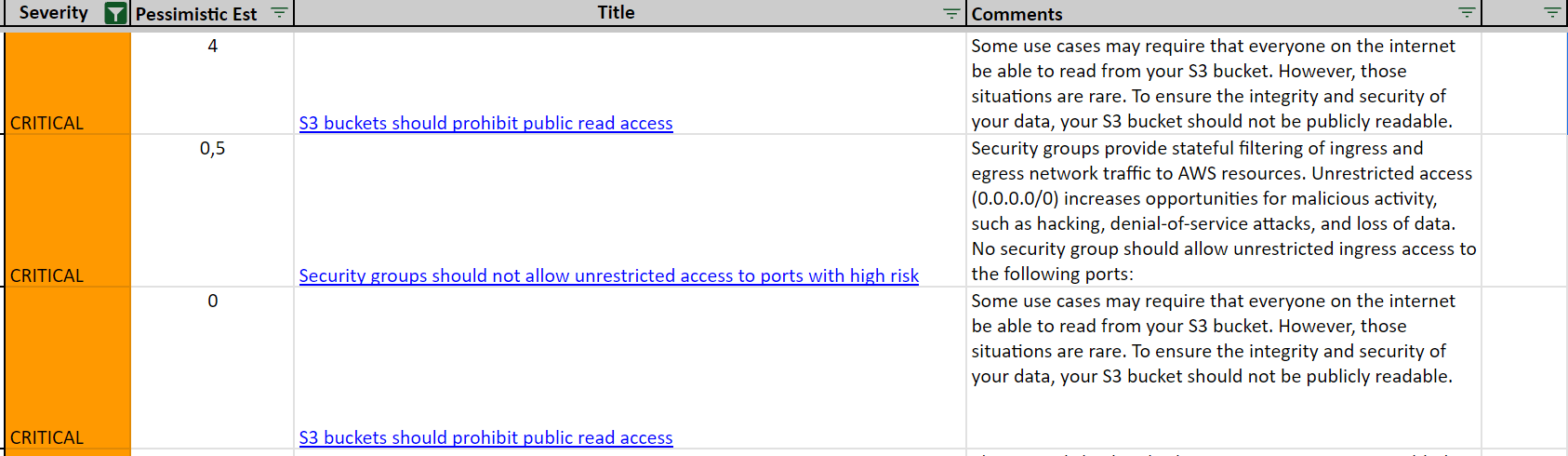

Unfortunately, AWS Config doesn’t let you export the findings. Even Security Hub doesn’t export all the fields we considered essential for planning the work, including:

- Severity.

- Optimistic estimate.

- Pessimistic estimate.

- Comments.

- Title.

- Resource.

- ComplianceStatus.

- Description.

- Recommendation.

- RemediationURL.

To solve this issue, we created a small script using the boto3 library. You can use it to download all NON_COMPLIANT rules to a single Excel (.xlsx) file.

AWS Config code:

#!/usr/bin/env python3
"""Export NON_COMPLIANT AWS Config conformance pack results to an Excel file."""
import logging
import os
import sys

import boto3
from botocore.exceptions import ClientError
import pandas as pd  # to_excel() also needs the openpyxl package

logger = logging.getLogger(__name__)


class Config:
    """Encapsulates AWS Config functions."""

    def __init__(self, config_client):
        """
        :param config_client: A Boto3 AWS Config client.
        """
        self.config_client = config_client

    def get_conformance_pack_compliance_details(self, pack_name):
        """Return every NON_COMPLIANT evaluation result of a conformance pack."""
        request = {
            'ConformancePackName': pack_name,
            'Filters': {'ComplianceType': 'NON_COMPLIANT'},
        }
        results = []
        try:
            # The API is paginated, so follow NextToken until it disappears.
            while True:
                response = self.config_client.get_conformance_pack_compliance_details(**request)
                results.extend(response["ConformancePackRuleEvaluationResults"])
                if "NextToken" not in response:
                    break
                request['NextToken'] = response["NextToken"]
        except ClientError:
            logger.exception("Couldn't get ConfigRules")
            raise
        logger.info("Got ConfigRules")
        return [self.format_item(item) for item in results]

    @staticmethod
    def format_item(item):
        """Flatten one evaluation result into a spreadsheet row."""
        try:
            qualifier = item['EvaluationResultIdentifier']['EvaluationResultQualifier']
            return {
                'ConfigRuleName': qualifier['ConfigRuleName'],
                'ResourceId': qualifier['ResourceId'],
                'ResourceType': qualifier['ResourceType'],
                'Annotation': item.get('Annotation'),
            }
        except KeyError:
            logger.exception("Can't format: %s", item)
            raise


def main():
    print('-' * 88)
    print("Welcome to the AWS Config export tool!")
    print('-' * 88)
    logging.basicConfig(level=logging.INFO, format='%(levelname)s: %(message)s')
    try:
        pack_name = sys.argv[1]
    except IndexError:
        print(f"Please set pack name as the first argument like: {sys.argv[0]} <pack_name>")
        sys.exit(1)
    client = Config(boto3.client('config'))
    print(f"Get AWS Config non-compliant list for '{pack_name}'...")
    df = pd.DataFrame(client.get_conformance_pack_compliance_details(pack_name))
    # Make sure the output directory exists before writing the report.
    os.makedirs("results", exist_ok=True)
    df.to_excel('results/aws_config_non_compliant.xlsx')
    print("Done!")
    print('-' * 88)


if __name__ == '__main__':
    main()

AWS Security Hub code:

#!/usr/bin/env python3
"""Export active, unresolved AWS Security Hub findings to an Excel file."""
import logging
import os

import boto3
from botocore.exceptions import ClientError
import pandas as pd  # to_excel() also needs the openpyxl package

logger = logging.getLogger(__name__)

# Only findings that are still open (NEW or NOTIFIED) and ACTIVE.
FILTERS = {
    'WorkflowStatus': [
        {'Value': 'NEW', 'Comparison': 'EQUALS'},
        {'Value': 'NOTIFIED', 'Comparison': 'EQUALS'},
    ],
    'RecordState': [
        {'Value': 'ACTIVE', 'Comparison': 'EQUALS'},
    ],
}


class SecurityHub:
    """Encapsulates AWS Security Hub functions."""

    def __init__(self, securityhub_client):
        """
        :param securityhub_client: A Boto3 AWS Security Hub client.
        """
        self.securityhub_client = securityhub_client

    def get_findings(self):
        """Return all findings matching FILTERS as flat dictionaries."""
        findings = []
        try:
            paginator = self.securityhub_client.get_paginator('get_findings')
            for page in paginator.paginate(Filters=FILTERS):
                findings.extend(page["Findings"])
        except ClientError:
            logger.exception("Couldn't get findings")
            raise
        logger.info("Got findings")
        return [self.format_item(item) for item in findings]

    @staticmethod
    def format_item(item):
        """Flatten one finding into a spreadsheet row."""
        try:
            recommendation = item.get('Remediation', {}).get('Recommendation', {})
            return {
                'Severity': item['Severity']['Label'],
                'Title': item['Title'],
                # Join the IDs so each finding fits into a single spreadsheet cell.
                'Resource': ', '.join(resource['Id'] for resource in item['Resources']),
                'ComplianceStatus': item.get('Compliance', {}).get('Status'),
                'Description': item['Description'],
                'Recommendation': recommendation.get('Text'),
                'RemediationURL': recommendation.get('Url'),
            }
        except KeyError:
            logger.exception("Can't format: %s", item)
            raise


def main():
    print('-' * 88)
    print("Welcome to the AWS Security Hub export tool!")
    print('-' * 88)
    logging.basicConfig(level=logging.INFO, format='%(levelname)s: %(message)s')
    client = SecurityHub(boto3.client('securityhub'))
    print("Export AWS Security Hub Findings...")
    df = pd.DataFrame(client.get_findings())
    # Make sure the output directory exists before writing the report.
    os.makedirs("results", exist_ok=True)
    df.to_excel('results/security_hub_findings.xlsx')
    print("Done!")
    print('-' * 88)


if __name__ == '__main__':
    main()

Prioritize quick wins

Each project is unique. Your specific findings will depend on the current infrastructure as code security and its state.

For example, after creating resources responsible for Networking, you’ll probably see non-compliant findings for Network ACLs and Security Groups. And after adding new EC2 instances, you’ll most likely see the need to back them up on schedule using AWS Backup, as well as add extra logging or encryption.

If you use S3 buckets, be sure to pay attention to new non-compliant rules immediately after adding the first bucket. This type of service has many compliance rules. Meeting some of them requires the creation of additional AWS resources. Those resources may, in turn, also not meet the requirements with the default settings.

And if you don’t yet rely on infrastructure as code, a great way to start is to check our guide on importing existing AWS resources to Terraform.

AWS Security Hub and AWS Config findings provide detailed recommendations for making a rule compliant.

You can, of course, deal with all NON_COMPLIANT rules one-by-one. However, in most cases this will take an eternity. It makes sense to go after quick wins instead.

These are the most security-critical issues that require the least amount of work to fix. After all, auditors don’t care about the team’s effort. They need you to close “gaps” in order to license the product.
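One way to make "quick wins" concrete is to rank the exported findings by severity relative to estimated effort. The snippet below is only a sketch under my own assumptions: the `PessimisticEstimate` column (in hours) is filled in by the team, and the weights and the `rank_quick_wins` helper are hypothetical, not something AWS provides.

```python
# Hypothetical weights: tune them to your own risk model.
SEVERITY_WEIGHT = {"CRITICAL": 4, "HIGH": 3, "MEDIUM": 2, "LOW": 1, "INFORMATIONAL": 0}


def rank_quick_wins(findings):
    """Sort findings by severity weight per estimated hour, highest first.

    Each finding is a dict with 'Title', 'Severity', and a team-supplied
    'PessimisticEstimate' in hours (an assumed, hand-filled column).
    """
    def score(finding):
        weight = SEVERITY_WEIGHT.get(finding["Severity"], 0)
        # Clamp tiny estimates so a zero never causes a division error.
        hours = max(float(finding["PessimisticEstimate"]), 0.5)
        return weight / hours

    return sorted(findings, key=score, reverse=True)


# Made-up sample rows, shaped like the exported spreadsheet.
findings = [
    {"Title": "S3 bucket without KMS encryption", "Severity": "HIGH", "PessimisticEstimate": 2},
    {"Title": "Cross-account log replication", "Severity": "HIGH", "PessimisticEstimate": 40},
    {"Title": "SNS topic not encrypted", "Severity": "MEDIUM", "PessimisticEstimate": 1},
]

for finding in rank_quick_wins(findings):
    print(finding["Severity"], finding["Title"])
```

A ratio like this deliberately lets a cheap Medium finding outrank an expensive High one, so treat the order as a starting point for Sprint planning rather than a verdict.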

For some rules, it’s enough to change an option in a Terraform resource, such as adding encryption or changing a cloud resource setting. Here are some examples:

- Enable S3 notifications to Lambda functions, SQS queues, and SNS topics in Terraform.

- Server-side encryption of S3 buckets with AWS KMS in Terraform.

- SNS topic encryption with AWS KMS in Terraform.
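The fixes themselves belong in Terraform, but it can help to verify the result from outside. The sketch below assumes you pass in the responses of boto3's `s3.get_bucket_encryption()` and `sns.get_topic_attributes()`; the helper names and sample values are made up for illustration.

```python
def bucket_uses_kms(encryption_response):
    """True if the bucket's default encryption rule uses SSE-KMS.

    Expects the dict returned by boto3's s3.get_bucket_encryption().
    """
    config = encryption_response.get("ServerSideEncryptionConfiguration", {})
    return any(
        rule.get("ApplyServerSideEncryptionByDefault", {}).get("SSEAlgorithm") == "aws:kms"
        for rule in config.get("Rules", [])
    )


def topic_uses_kms(attributes_response):
    """True if the SNS topic has a KMS key configured.

    Expects the dict returned by boto3's sns.get_topic_attributes().
    """
    return bool(attributes_response.get("Attributes", {}).get("KmsMasterKeyId"))


# Sample responses with made-up values, shaped like the real API output.
encrypted_bucket = {
    "ServerSideEncryptionConfiguration": {
        "Rules": [{
            "ApplyServerSideEncryptionByDefault": {
                "SSEAlgorithm": "aws:kms",
                "KMSMasterKeyID": "arn:aws:kms:us-east-1:111122223333:key/example",
            },
            "BucketKeyEnabled": True,
        }]
    }
}
plain_topic = {"Attributes": {"TopicArn": "arn:aws:sns:us-east-1:111122223333:events"}}

print(bucket_uses_kms(encrypted_bucket))
print(topic_uses_kms(plain_topic))
```

Because the helpers only read responses, they are safe to run against any environment and don't introduce drift from the Terraform state.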

Other rules require the creation of new Terraform resources and modules, or changes to the application architecture.

Let’s discuss these challenges and possible solutions next!

Deal with the toughest challenges

After deploying each part of the infrastructure, look at changes in the AWS HIPAA compliance score.

Many of the fixes below require significant additions or reworks. They become more difficult as the codebase grows larger and more interconnected. So, it’s better to tackle these problems before you have a Mount Doom worth of infrastructure code.

S3 buckets

Some of the most time-consuming fixes had to do with S3 buckets. They include replication with encryption, event logging, access logging, and other bucket settings.

For example, replication and logging required creating additional buckets in a separate account. These buckets used their own KMS customer managed keys. So we had to configure extra KMS key policies.

One compliance requirement was that KMS key permissions be added to the KMS policy itself, not to the IAM policy. The problem was that we already used the terraform-aws-s3-bucket module. Its input parameter logic was incompatible with the necessary compliance changes. We had to refactor a huge chunk of infrastructure code.

What’s more, you must send S3 bucket events to SNS topics with separate roles and policies. For these reasons, our Terraform state began to increase rapidly. Code readability suffered, requiring a fix I’ll detail later.

This harks back to my main argument. Learning how to be HIPAA-compliant early frees you from expensive consequences down the road.

Backups

Another time-consuming change is setting up the AWS Backup service.

Some compliance rules require appropriate backup plans in the AWS Backup service. This involves describing the backup and retention rules for all data resources, including EC2 instances, RDS databases, and DynamoDB tables. You’ll also likely want to back up S3 buckets or EFS file systems.

To function correctly, these backup jobs require describing all the necessary resources. For this, you need to describe additional KMS keys and policies for them. Another requirement is to describe the corresponding IAM roles together with the resource permissions. There’s also the matter of backup schedules, their frequency, rotation, moving the data between different types of storage to reduce costs, and so on.
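To give a feel for what a backup plan has to describe, here is a minimal sketch that builds the `BackupPlan` argument for boto3's `backup.create_backup_plan()`. The plan name, vault name, schedule, and retention numbers are placeholders I made up; in our project the equivalent settings lived in Terraform.

```python
def build_backup_plan(name, vault_name, schedule, cold_after_days, delete_after_days):
    """Build the BackupPlan argument for boto3's backup.create_backup_plan()."""
    # AWS Backup requires retention to be at least 90 days longer than the
    # transition to cold storage, so fail early instead of at deploy time.
    if delete_after_days < cold_after_days + 90:
        raise ValueError("delete_after_days must be >= cold_after_days + 90")
    return {
        "BackupPlanName": name,
        "Rules": [{
            "RuleName": f"{name}-rule",
            "TargetBackupVaultName": vault_name,
            "ScheduleExpression": schedule,
            "Lifecycle": {
                "MoveToColdStorageAfterDays": cold_after_days,
                "DeleteAfterDays": delete_after_days,
            },
        }],
    }


# Placeholder values: a daily backup at 03:00 UTC, moved to cold storage
# after 30 days and deleted after a year.
plan = build_backup_plan(
    name="project-prod",
    vault_name="project-prod-vault",
    schedule="cron(0 3 * * ? *)",
    cold_after_days=30,
    delete_after_days=365,
)
print(plan["Rules"][0]["Lifecycle"])
```

The plan is only one piece. The vault, its KMS key, the IAM role, and the resource selection still have to be described separately, which is where most of the effort goes.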

All of this requires a lot of effort. However, backups are absolutely essential — both for the product’s data and the extra AWS resources needed to raise your AWS HIPAA compliance score.

KMS customer-managed keys

HIPAA compliance requires using customer-managed KMS keys. This allows you to set up narrow policies that limit access for specific roles and services for specific operations using the keys.

However, switching to customer-managed keys will break a lot of things that work out of the box with AWS-managed keys.

AWS Security Hub requires you to use the KMS key policy instead of working with KMS keys directly on IAM roles or users. Without accounting for this requirement when starting the infrastructure coding, you’ll need a lot of extra work to raise the Security Score.
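As an illustration of putting permissions into the key policy itself, the sketch below builds a policy document with one narrowly scoped statement for a service role. The account ID, role ARN, and action list are hypothetical, and the first statement mirrors the AWS default that keeps the key manageable by account administrators. Review it against your own requirements before using anything like it.

```python
import json


def build_key_policy(account_id, role_arn, actions):
    """Return a KMS key policy that grants `actions` to a single role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Same idea as the default key policy: without this statement
                # the key can become unmanageable.
                "Sid": "EnableRootAccountAdministration",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
                "Action": "kms:*",
                "Resource": "*",
            },
            {
                # Narrow grant for one role and only the operations it needs.
                "Sid": "AllowServiceRoleUse",
                "Effect": "Allow",
                "Principal": {"AWS": role_arn},
                "Action": sorted(actions),
                "Resource": "*",
            },
        ],
    }


# Hypothetical account and role, for illustration only.
policy = build_key_policy(
    account_id="111122223333",
    role_arn="arn:aws:iam::111122223333:role/project-prod-s3-replication",
    actions={"kms:Decrypt", "kms:GenerateDataKey"},
)
print(json.dumps(policy, indent=2))
```

In a key policy, `"Resource": "*"` refers to the key the policy is attached to, so the grant stays limited to that single key.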

Our project had been switched from AWS-managed to customer-managed keys, so we had to redo service role policies. We then added the corresponding policies to all keys. Cross-account usage of keys for S3 bucket replication is another non-trivial task.

These fixes affect security and HIPAA compliance scores. What’s more, narrower KMS key policies give more control over security. It’s a win-win situation as long as you don’t put these fixes on the back burner.

Public access

Before the AWS Security Hub/Config audit, we had a typical EC2 setup for our bastion instance. It had SSH keys and a public IP address with a whitelist of IPs used for the company’s VPN servers. Using a dedicated VPN gateway directly on the project is a far better option. However, the gateway would still have a public IP address or an additional tunnel to the back office.

So, how can you connect to internal resources without having any public access points using the available AWS tools?

Our solution was to move the bastion server to a private network and connect to it using AWS Systems Manager Session Manager with port forwarding.

The project used the serverless approach, so the team had limited access to the AWS account. The ability to create a tunnel to access the database is also regulated using the IAM policy specified in the team roles.

This way, we don’t have to create additional resources for the VPN server and VPN user management. We just reuse the existing resources in the AWS IAM Identity Center (identity store, user groups, permission sets).

tunnel.sh

#!/usr/bin/env bash
# Expects AWS_PROFILE, AWS_REGION, and OBJECTS_PREFIX to be set by the caller (see dev.sh).

# Find the running bastion instance by its Name tag.
BASTION_ID=$(aws-vault exec "${AWS_PROFILE}" -- aws --region "${AWS_REGION}" ec2 describe-instances \
  --filters "Name=tag:Name,Values=${OBJECTS_PREFIX}-bastion" "Name=instance-state-name,Values=running" \
  --query "Reservations[*].Instances[*].[InstanceId]" --output text)

# Forward a local port to the database through the bastion via Session Manager.
aws-vault exec "${AWS_PROFILE}" -- aws --region "${AWS_REGION}" ssm start-session \
  --target "${BASTION_ID}" \
  --document-name AWS-StartPortForwardingSessionToRemoteHost \
  --parameters host="${DB_HOST:=database.db.${AWS_REGION}.compute.internal}",portNumber="${DB_REMOTE_PORT:=5432}",localPortNumber="${DB_LOCAL_PORT:=5432}"

dev.sh

#!/usr/bin/env bash
OBJECTS_PREFIX="project-dev-<rand_id>"
AWS_REGION="us-east-1"
AWS_PROFILE="project-dev"
DB_LOCAL_PORT="15435"
DB_REMOTE_PORT="15432"
source ./tunnel.sh
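The IAM side of this setup, where a team role decides who may open the tunnel, could look roughly like the policy built below. This is a sketch under assumptions: the region, account ID, and instance ID are placeholders, the ARN format for the AWS-owned document should be verified for your partition, and a real permission set usually also needs permissions to terminate or resume the user's own sessions.

```python
import json


def build_tunnel_policy(region, account_id, bastion_instance_id):
    """IAM policy allowing port-forwarding sessions to one bastion instance only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "StartPortForwardingToBastion",
                "Effect": "Allow",
                "Action": "ssm:StartSession",
                "Resource": [
                    # The only instance that may be used as a jump host.
                    f"arn:aws:ec2:{region}:{account_id}:instance/{bastion_instance_id}",
                    # The only session document allowed, so no interactive shell.
                    # Assumed ARN format for AWS-owned documents (empty account ID).
                    f"arn:aws:ssm:{region}::document/AWS-StartPortForwardingSessionToRemoteHost",
                ],
            }
        ],
    }


# Placeholder identifiers, for illustration only.
policy = build_tunnel_policy("us-east-1", "111122223333", "i-0123456789abcdef0")
print(json.dumps(policy, indent=2))
```

Listing the document next to the instance is what keeps the role limited to tunnels: starting a session with any other document is not allowed by this statement.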

Security groups

Processing security group rules while ensuring the system remains functional is easy if you have few services and a properly implemented VPC.

The situation is far more challenging post-release or during active EHR development. Any drastic amendments to security groups are especially problematic if you already have infrastructure as code. Some of the necessary fixes may require changing the architecture or internal resource access.

So, pay attention to NON_COMPLIANT rules and high/critical Security Hub findings related to security groups and networking in general. The best time to do this is immediately after describing the VPC and before filling it with services.

Another challenge is that the security requirements for network configuration (the verification rules in the aforementioned services) may be stricter than you expected when building the project foundation. It’s therefore better to pay attention to any inconsistencies when building this “foundation” so that you don’t have to smash down “walls” later on.

Execution and access logs

You’ve described all the resources needed for the project to function. You’ve already implemented the collection and visualization of logs. The job is done, right?

Well, I have bad news. The rules for checking the services mentioned above have additional requirements:

- Adding access logs for your API Gateway, a CloudWatch group, and an IAM role with the corresponding policy.

- Configuring CloudFront access logs, VPC flow logs, and Elastic Load Balancing logs at minimum.

- Collecting these logs in S3 buckets in a separate account.

- Describing your cross-account S3 bucket and KMS access policies.

- Creating customer-managed KMS keys in both accounts.

To comply with security requirements, you’ll also need to set up access logs for all your S3 buckets. However, there is no way to write them to another account immediately. You’ll need to add an extra bucket to your current account and set up replication to the log account. This will also entail the creation of KMS keys and additional policies.

Implementing these tasks alone may take several weeks. You might also need to add extra cloud resources and configure their interactions to meet the security requirements.

Be careful when estimating and planning the work.

A growing number of Terraform State resources

Fixing AWS Config and Security Hub findings is often a chicken-and-egg kind of problem.

You add new resources. Those new resources don’t comply with the requirements. So, you have to change things and add new resources.

Our Terraform State, for example, grew from 356 to 900+ resources. Of course, not all of them relate to compliance as code and security. However, it’s safe to say that complying with HIPAA will roughly double the complexity and size of your infrastructure code.

With such a large number of resources in one State, you have another problem. Our ‘terraform plan’ and ‘terraform apply’ runs took over 7 minutes and used all Intel Core i7 cores at 100%, which is not typical for Terraform.

Making a tiny syntactic error leads to a failed run. So after fixing the error, we had to wait another 7 minutes to see the result. This wasted a ton of development time, making it horribly inefficient.

The team had to refactor the code, breaking it into several smaller states to solve this issue. We recommend following Terragrunt’s DRY approach to code, configuration, and architecture.

With these changes, our infrastructure development became much easier. The ‘terraform plan’ output is more predictable. We have fewer resources with the status “known after apply.” Instead of running the whole thing, it’s now possible to run a small fragment as an independent Terraform deployment.

This is a huge quality of life improvement on top of infrastructure as code compliance.
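Before splitting a large State, it helps to see where the resources actually live. The helper below is a hypothetical sketch that counts resources per top-level module from the output of `terraform state list`; the addresses in the example are made up.

```python
from collections import Counter


def count_resources_by_module(addresses):
    """Count resources per top-level module from `terraform state list` lines."""
    counts = Counter()
    for address in addresses:
        address = address.strip()
        if not address:
            continue
        parts = address.split(".")
        if parts[0] == "module" and len(parts) > 1:
            # Drop any instance key, e.g. module.s3["logs"] -> module.s3
            key = "module." + parts[1].split("[")[0]
        else:
            key = "(root)"
        counts[key] += 1
    return counts


# Made-up sample of `terraform state list` output.
state_list_output = """
module.network.aws_vpc.main
module.network.aws_subnet.private[0]
module.s3["logs"].aws_s3_bucket.this
aws_kms_key.backup
"""

for module, total in count_resources_by_module(state_list_output.splitlines()).most_common():
    print(total, module)
```

Modules that dominate the count are natural candidates for their own State, provided their dependencies on the rest of the code can be passed in as inputs.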

AWS Config, Security Hub, and Control Tower contradictions

Beware that AWS Config/Security Hub recommendations can sometimes be illogical.

For example, you are required to log S3 bucket requests. These logs must be added to another bucket in the same region and account. However, this second bucket can’t be encrypted with a customer-managed key, which violates another requirement.

To solve these issues, you must add S3 bucket logs to Security Hub exceptions and project documentation.
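One way to record such an exception is to suppress the finding together with a note explaining why. The sketch below wraps boto3's `securityhub.batch_update_findings()`. The finding ID, the note text, and the `RecordingClient` stand-in (which lets the example run without AWS access) are assumptions made for illustration.

```python
def suppress_finding(securityhub_client, finding_id, product_arn, reason, updated_by):
    """Mark a finding as SUPPRESSED and attach the reason as a note."""
    return securityhub_client.batch_update_findings(
        FindingIdentifiers=[{"Id": finding_id, "ProductArn": product_arn}],
        Note={"Text": reason, "UpdatedBy": updated_by},
        Workflow={"Status": "SUPPRESSED"},
    )


class RecordingClient:
    """Stand-in for boto3.client("securityhub") that only records the call."""

    def __init__(self):
        self.calls = []

    def batch_update_findings(self, **kwargs):
        self.calls.append(kwargs)
        return {"ProcessedFindings": kwargs["FindingIdentifiers"], "UnprocessedFindings": []}


# With real credentials you would pass boto3.client("securityhub") instead.
client = RecordingClient()
response = suppress_finding(
    client,
    finding_id="arn:aws:securityhub:us-east-1:111122223333:subscription/example/finding/1234",
    product_arn="arn:aws:securityhub:us-east-1::product/aws/securityhub",
    reason="S3 access-log bucket cannot use a customer-managed key; see project docs.",
    updated_by="devops-team",
)
print(response["UnprocessedFindings"])
```

Keeping the reason in the note means the exception is visible to an auditor in Security Hub itself, not only in the project documentation.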

The AWS Control Tower can be another source of contradictions. The service is great for central management of certain security, access, and monitoring rules.

Connecting your environment accounts creates CloudFormation resources and automatically executes them. This allows Control Tower to interact with the connected accounts.

However, resources created by these CloudFormation stacks don’t meet some of the compliance and security rules, decreasing your overall score. Even worse, they can’t be edited or deleted.

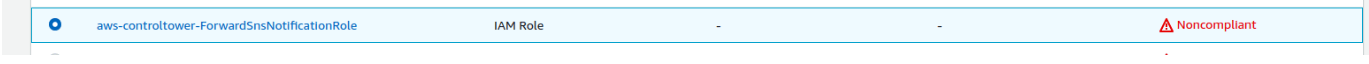

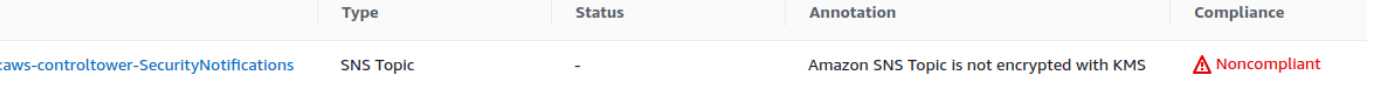

Example #1. The rule is NON_COMPLIANT if an AWS Identity and Access Management (IAM) user, role, or group has any inline policy.

Example #2. The rule is NON_COMPLIANT if an SNS topic is not encrypted with AWS KMS. Optionally, specify the key ARNs, the alias ARNs, the alias name, or the key IDs for the rule to check.

Maintain HIPAA compliance

You can solve even the most challenging problems by writing your infrastructure code with HIPAA compliance already in mind. Run AWS Config and Security Hub audits as early as possible. Implement the most critical findings. Make your healthcare software compliance a continuous, iterative process.

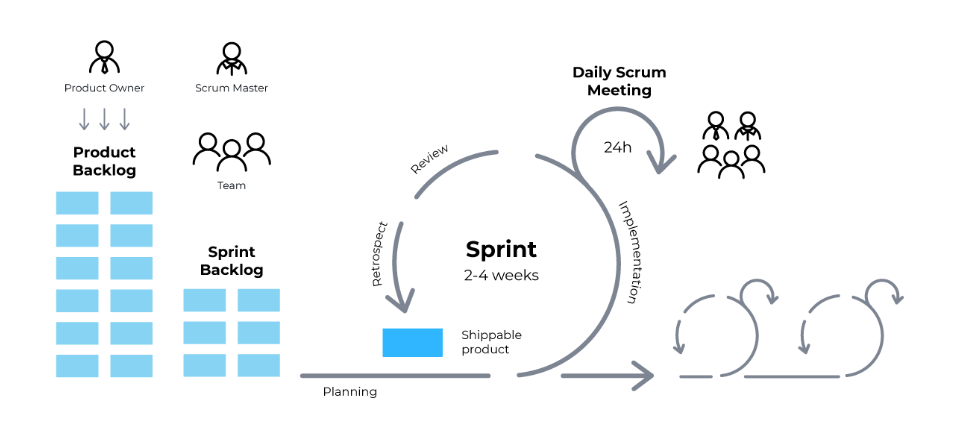

Plan your Sprints with HIPAA in mind

It’s a good idea to run follow-up compliance and security audits from time to time. Add any new AWS Config NON_COMPLIANT rules and Security Hub Findings to the backlog. Re-prioritizing them during each Sprint planning allows you to become HIPAA-compliant while keeping a high velocity.

Use the same Sprint planning process for any changes to existing resources or new additions to the product.

Audits reveal what needs to be changed. You can then add alerting for any critical findings and automatic corrections with Lambda functions.
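As a rough idea of what alerting with a Lambda function can look like, the handler below filters an EventBridge "Security Hub Findings - Imported" event down to its critical and high findings. It is a sketch only: the sample event is made up, and the actual notification step (SNS, Slack, a ticket) is left as a print.

```python
ALERT_LABELS = {"CRITICAL", "HIGH"}


def lambda_handler(event, context):
    """Return the findings from a Security Hub event that deserve an alert."""
    findings = event.get("detail", {}).get("findings", [])
    alerts = [
        {
            "title": finding["Title"],
            "severity": finding["Severity"]["Label"],
            "resources": [resource["Id"] for resource in finding.get("Resources", [])],
        }
        for finding in findings
        if finding.get("Severity", {}).get("Label") in ALERT_LABELS
    ]
    for alert in alerts:
        # In Lambda, stdout ends up in CloudWatch Logs; replace this with a
        # real notification channel.
        print(f"[{alert['severity']}] {alert['title']}: {', '.join(alert['resources'])}")
    return {"alerts": alerts}


# Made-up event, shaped like the EventBridge payload.
sample_event = {
    "detail-type": "Security Hub Findings - Imported",
    "detail": {
        "findings": [
            {
                "Title": "Security group allows unrestricted SSH access",
                "Severity": {"Label": "CRITICAL"},
                "Resources": [{"Id": "arn:aws:ec2:us-east-1:111122223333:security-group/sg-123"}],
            },
            {
                "Title": "S3 bucket lacks lifecycle policy",
                "Severity": {"Label": "LOW"},
                "Resources": [{"Id": "arn:aws:s3:::project-dev-logs"}],
            },
        ]
    },
}

result = lambda_handler(sample_event, None)
```

Automatic corrections would hang off the same handler, but I'd start with alerts only and add remediation once you trust the filter.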

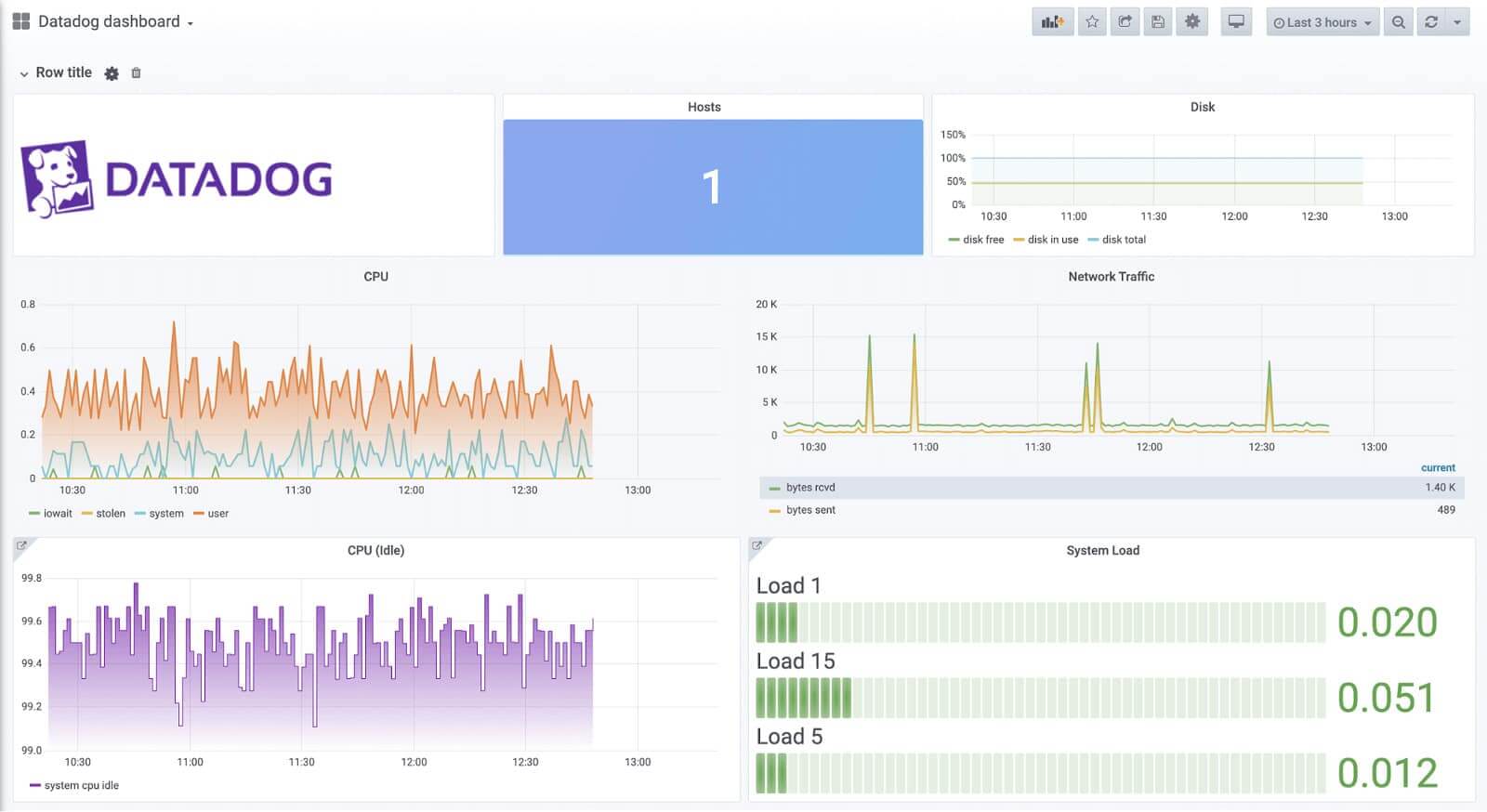

Integrate Datadog monitoring

Datadog is a powerful tool that monitors your environment, detects anomalies, and makes recommendations based on the data it receives. What I like most about Datadog is that it’s an all-in-one solution with good, structured documentation. It doesn’t just collect metrics, logs, events, and traces, but also correlates all these events with each other.

So, you can fully monitor the state and operation of individual services.

Datadog also monitors your cloud costs, provides recommendations, and tracks the cost dynamics in all resources. Its security services layer analyzes the data received and warns about attacks. It’s even cheaper than similar AWS services.

Datadog dashboard. Source: Grafana

Integrating your Datadog and AWS accounts is a common challenge. It requires creating or describing many objects, both in Datadog itself and your AWS environment:

- List of integrations and their configuration.

- Monitors for each environment.

- Dashboards for each environment.

- IAM roles.

- Lambda Forwarder.

- EventBridge rules.

- AWS resources tagging.

- CloudWatch subscriptions.

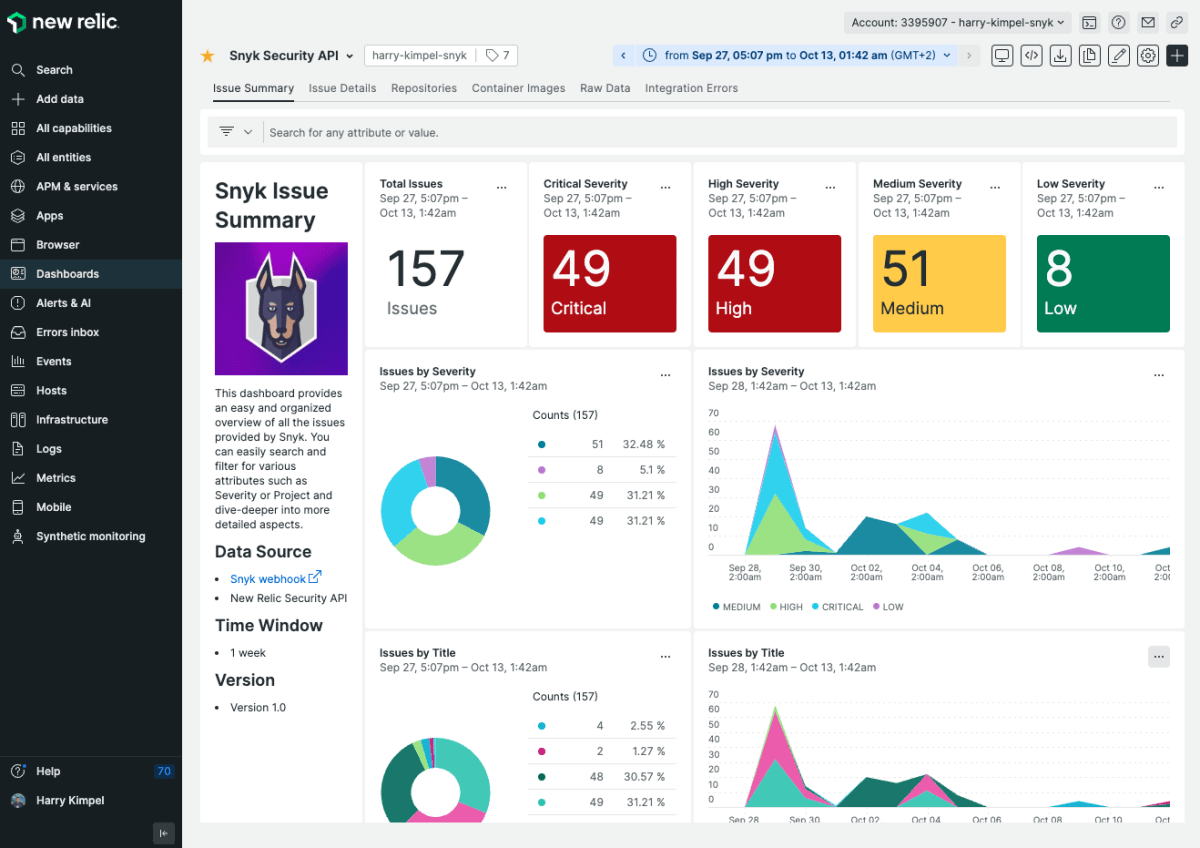

Automate vulnerability scanning

Vulnerability scanning tools like Snyk periodically scan your repository to detect libraries and code with potential vulnerabilities. They also provide recommendations on how to fix these vulnerabilities. We integrated Snyk with Bitbucket to get vulnerability notifications and even pull requests with updated versions of the libraries.

Some of our services use Docker images uploaded to Amazon Elastic Container Registry. So, we implemented ECR image scanning as one of the Security Hub’s recommendations. It now provides additional reports about container package vulnerabilities, including open-source packages of third-party services.

This allows you to make educated decisions about using specific packages or updating their version.
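To turn those reports into a decision, you can summarize the severity counts that ECR returns. The helper below is a sketch that reads the response of boto3's `ecr.describe_image_scan_findings()`. The choice of blocking severities and the sample counts are my own assumptions.

```python
def blocking_vulnerabilities(scan_response, blocked=("CRITICAL", "HIGH")):
    """Return the non-zero counts of severities that should block a release.

    Expects the dict returned by boto3's ecr.describe_image_scan_findings().
    """
    counts = scan_response.get("imageScanFindings", {}).get("findingSeverityCounts", {})
    return {severity: counts[severity] for severity in blocked if counts.get(severity)}


# Made-up response, shaped like the real API output.
sample_response = {
    "imageScanStatus": {"status": "COMPLETE"},
    "imageScanFindings": {
        "findingSeverityCounts": {"HIGH": 2, "MEDIUM": 7, "LOW": 12},
    },
}

blockers = blocking_vulnerabilities(sample_response)
if blockers:
    print("Image should not be promoted:", blockers)
```

A check like this fits well as a pipeline step after the image is pushed, so a vulnerable package is caught before it reaches a production account.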

Source: New Relic

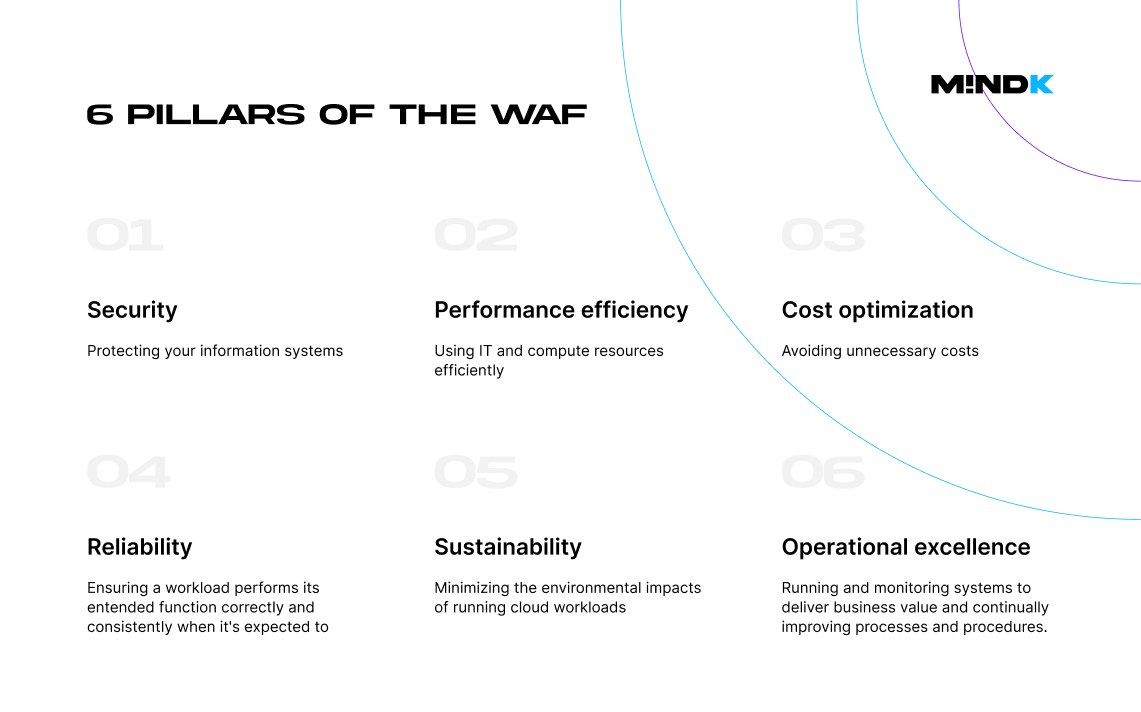

Adopt the AWS Well-Architected Framework

The AWS Well-Architected Framework (WAFR) is a set of Amazon’s guidelines and best practices. It goes beyond strictly technical recommendations on how to become HIPAA-compliant. WAFR helps engineers keep an eye on the bigger picture: organizational processes, communication, and team management.

A great place to start is to familiarize your team with the key pillars of the AWS Well-Architected Framework. Together, these recommendations help you build reliable, secure, efficient, and cost-effective systems in the cloud.

Wrapping up HIPAA compliance for startups

Compliance often seems like a costly distraction for early-stage startups. Yet, the longer you wait, the more difficult and expensive it becomes.

It requires adding new resources, which may be NON_COMPLIANT by default. Infrastructure changes might break existing environments, so you’ll have to retest all features that might be affected by the changes.

The best thing you can do is to start writing your IaC with security and HIPAA in mind. Run AWS Config and Security Hub audits as early as possible. Then, fix things iteratively. Create disaster recovery plans and discuss with business stakeholders how to act in case of audits and various problems.

I hope you now understand the basics of HIPAA compliance for startups. Feel free to contact us if you have any questions or need assistance with your project.