HIPAA is a complex topic. Technical implementation has many nuances, which hackers exploit and regulators punish. In February 2024, hackers broke into the United Healthcare CHC server, compromising the data of 110 million Americans. The alarming part isn’t the human cost, the sheer scale of the breach, or the eye-watering $2.45B in losses for United Healthcare.

The insurance company neglected the most basic rules of HIPAA-compliant software development. Hackers got their hands on emails that had no encryption and logged into a server that had no two-factor authentication. Once the door was open, they infiltrated the systems using more sophisticated methods.

What they stole contained some of the most sensitive information for any person – medical diagnoses, test results, Social Security numbers, and even physical addresses. With poor incident response, the company had to pay a $22M ransom.

This story is sadly all too common in the healthcare industry. As a CTO at a medical software company, I recently talked to what you may consider a well-established industry player. At first, their product was only tangentially related to healthcare. The focus was on time-to-market and basic security. When the company realized the need for HIPAA certification, the initial audit showed a ~40% compliance score.

In this article, I’ll provide a simple explanation of HIPAA’s core concepts and cover all the key aspects of HIPAA compliance for software development.

It’s a great entry point for product managers and startup founders. For a more technical explanation of specific challenges and solutions, I also recommend checking our guides on HIPAA compliance automation for startups and legacy software.

What is HIPAA: making sense of the jargon

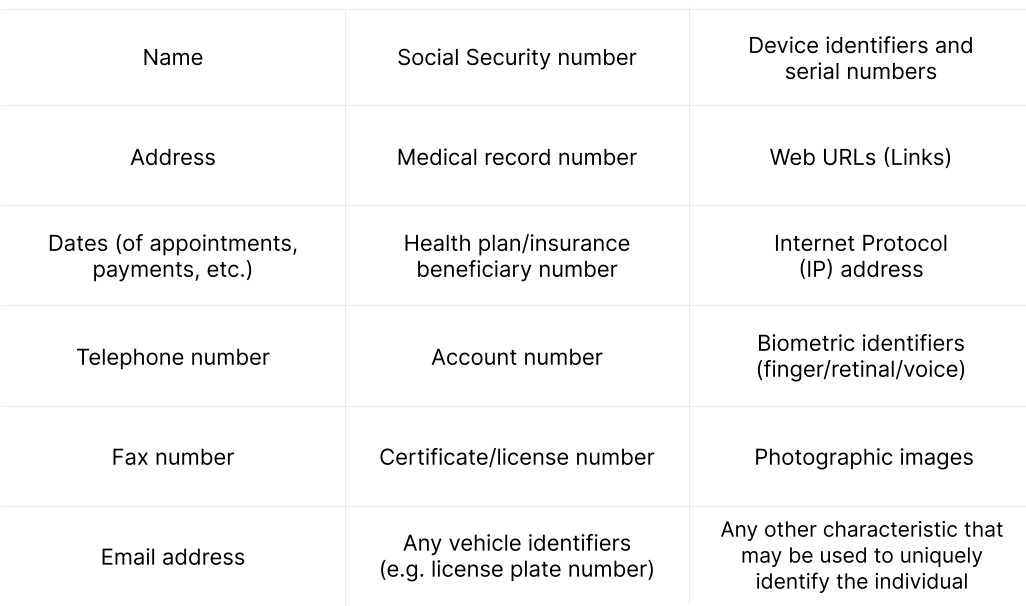

The Health Insurance Portability and Accountability Act (HIPAA) was enacted by the US Congress in 1996. Its primary goal is to protect the privacy and security of Protected Health Information (PHI). PHI includes any data that can be used to identify a patient.

PHI = health information + personal identifiers.

- Health information includes a patient’s medical history, diagnosis, treatment plan, insurance details, payment information, and so on.

- Personal identifiers are names, addresses, birthdates, social security numbers, photos, and all information that can be used to identify a specific patient.

The fact that an individual has received medical services is PHI in itself.

What is considered PHI? The full list.

Covered Entities is another key term in HIPAA compliance. It includes all organizations and individuals offering healthcare services and operations or accepting payments for them.

- All healthcare providers (hospitals, doctors, dentists, psychologists)

- Health plans (insurance providers, HMOs, and government programs like Medicare and Medicaid)

- Clearinghouses (organizations that act as middlemen between the healthcare providers and insurance companies)

Business Associates is our last and the most important term for HIPAA-compliant software development.

This category includes developers of healthcare apps, hosting/data storage providers, email services, and pretty much every medical startup in the USA. According to HIPAA, you must sign a Business Associate Agreement (BAA) with each party accessing PHI in your system. Deciding not to sign a BAA doesn’t free you from the requirements of HIPAA-compliant development.

Who needs to comply with HIPAA?

If your application collects, stores, or transmits PHI to the covered entities, you must comply with the HIPAA Security Rule. This means implementing administrative, physical, and technical safeguards to keep that data confidential, intact, and available only to authorized users.

As a result, whether you need HIPAA-compliant app development boils down to two questions:

- What type of data will your app use, share, and store?

- What type of entity will use it?

Dealing with sensitive health information? Do your users include covered entities like a hospital or health insurer? You must become HIPAA compliant. If your app doesn’t handle such data or doesn’t have covered entity users, don’t worry about compliance.

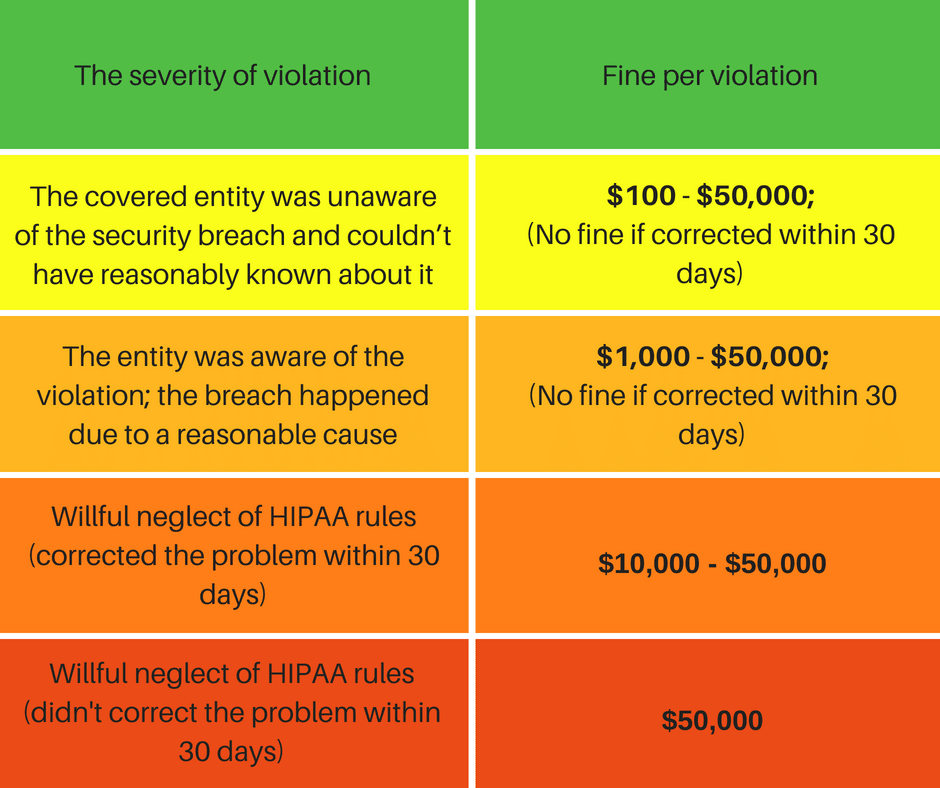

HIPAA fines according to the degree of negligence

Who doesn’t need HIPAA-compliant development?

Some types of healthcare applications may be excluded from HIPAA. The specific requirements are that they don’t deal with sensitive health information and are not used by a covered entity:

- Nutrition tracking or diet apps.

- Personal health or mental health tracking apps.

- Fitness or exercise apps.

So, if your app only focuses on workouts or calculates body mass index without collecting patient data, you’re in the clear.

How to become HIPAA-compliant?

The regulation refers both to healthcare organizations and startups that build medical software.

Both require regular evaluation of their technical and non-technical efforts to protect PHI. Use the HHS audit protocol to assess your HIPAA compliance or hire an independent auditor such as HITRUST. Just remember that OCR doesn’t recognize any third-party certificates.

HIPAA compliance for software development, in particular, requires an audit of safeguards outlined in the Security Rule:

- Technical Safeguards include security measures like login, encryption, emergency access, and activity logs.

- Physical Safeguards secure the facilities and devices that store PHI (servers, data centers, PCs, laptops, etc.). With modern cloud-based solutions, this rule mostly applies to HIPAA-compliant hosting.

Now, let’s proceed to HIPAA compliance for software vendors specifically.

9 steps in HIPAA-compliant software development

The law doesn’t specify any technologies for use in protecting PHI. However, the industry has devised a set of best practices, which I included in this HIPAA compliance checklist for software development.

Secure application & infrastructure design

As a rule of thumb, it’s much easier and cheaper to start a project with HIPAA in mind.

Serverless architecture, infrastructure as code, and secure cloud platforms are your bread and butter.

As a certified AWS partner, MindK recommends Amazon’s guidelines for serverless architecture. This is a great starting point for your HIPAA-compliant development efforts. Another good step at this stage is to set up your company’s wonderfully named AWS Managed Services management account ahead of time.

For larger projects, it’s better to have a separate sub-account for every environment. This makes it easier to manage access rights, change things rapidly, and isolate cloud resources and data. AWS Control Tower is a great tool for automating account management at scale.

For technical HIPAA audits, AWS Config and AWS Security Hub are among the best tools. They are a part of the AWS ecosystem. They don’t require costly subscriptions. Moreover, starting an audit takes just a few clicks in the AWS console.

Fixing the NON_COMPLIANT issues later on, however, may not be so trivial.

For example, S3 buckets, AWS Backup service, KMS customer-managed keys, bastion instance access rights, security group rules, and logging were some of the most challenging fixes our Lead DevOps Engineer described in his in-depth guide on HIPAA compliance for medical startups. He focused on technical recommendations like overcoming the growing quantity of Terraform state resources with a modular Don’t Repeat Yourself (DRY) approach.

My article, on the other hand, is a less technical HIPAA compliance checklist for software development.

Access control

According to the HIPAA Privacy Rule, nobody should access more patient information than is required to do their job. The rule also covers de-identification, patients’ rights to view their own data, and their ability to give or restrict access to their PHI. This is where data access control comes into play.

Role-based access control restricts the rights based on a user’s job, responsibilities, and role within a company. For instance, a nurse might only access the records of patients they are currently treating. Doctors might edit a wider range of patient records based on their specialty.

This helps keep patient data secure and accessible only to those who really need it.

Attribute-based control restricts access based on attributes associated with the user, the data, and the context of the access request. These attributes can be a person’s clearance level, their current location, the data’s sensitivity level and format, and even the time of day. By combining attributes, you can control access more precisely than with the role-based approach.
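To make the idea of combining attributes more concrete, here is a minimal Python sketch. The field names, the sensitivity scale, and the 07:00-19:00 working window are all assumptions made for illustration, not rules taken from HIPAA.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class AccessRequest:
    """Attributes of one access attempt (all field names are hypothetical)."""
    clearance_level: int   # attribute of the user
    on_premises: bool      # attribute of the context (user's location)
    data_sensitivity: int  # attribute of the data being requested
    request_time: time     # attribute of the context (time of day)


def is_allowed(req: AccessRequest) -> bool:
    """Grant access only when every attribute rule passes."""
    has_clearance = req.clearance_level >= req.data_sensitivity
    # Assumed rule: highly sensitive records (level 3+) are readable only on premises.
    location_ok = req.on_premises or req.data_sensitivity < 3
    # Assumed rule: access is limited to a 07:00-19:00 working window.
    within_hours = time(7, 0) <= req.request_time <= time(19, 0)
    return has_clearance and location_ok and within_hours
```

A real policy engine would load such rules from configuration instead of hard-coding them, but the principle is the same: a request is evaluated against several attributes at once.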

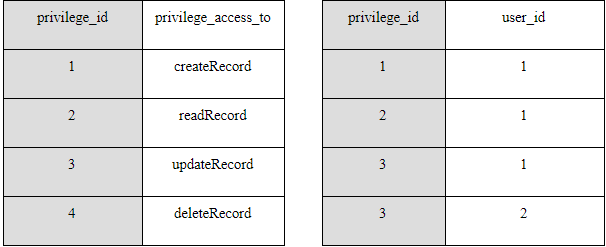

User-based access control focuses on the user identity. One way to accomplish this is to assign each user a unique ID. This allows identification and tracking the activity of people in the system. Next, give each user a list of privileges to view or modify certain information. You can regulate access to individual database entities and URLs.

In its simplest form, user-based access control consists of two database tables. One table contains the list of all privileges and their IDs. The second table assigns these privileges to individual users. In this example, the physician (user ID 1) can create, view, and update medical records, while the radiologist (user ID 2) can only update them.
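The two-table layout described above could look roughly like this. It is a sketch using an in-memory SQLite database; the table names, column names, and privilege names are assumptions chosen for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Table 1: the list of all privileges and their IDs.
    CREATE TABLE privileges (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL UNIQUE
    );
    -- Table 2: which privileges are assigned to which user.
    CREATE TABLE user_privileges (
        user_id      INTEGER NOT NULL,
        privilege_id INTEGER NOT NULL REFERENCES privileges(id),
        PRIMARY KEY (user_id, privilege_id)
    );
    INSERT INTO privileges (id, name) VALUES
        (1, 'create_record'), (2, 'view_record'), (3, 'update_record');
    -- User 1 (physician) can create, view, and update; user 2 (radiologist) can only update.
    INSERT INTO user_privileges (user_id, privilege_id) VALUES
        (1, 1), (1, 2), (1, 3), (2, 3);
""")


def has_privilege(user_id: int, privilege: str) -> bool:
    """Return True if the user has been assigned the named privilege."""
    row = conn.execute(
        """SELECT 1 FROM user_privileges up
           JOIN privileges p ON p.id = up.privilege_id
           WHERE up.user_id = ? AND p.name = ?""",
        (user_id, privilege),
    ).fetchone()
    return row is not None
```

Every request handler that touches PHI would then call something like `has_privilege(current_user_id, "update_record")` before doing any work.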

Which access control is better to use? It all depends on your business needs. Sometimes, just one type of access control is enough to cover all your bases. Other times, you might need to mix and match.

For example, when developing the first EMR system for lactologists, role-based access control was enough to meet the initial requirements. Its 2.0 version uses a permission-based control with attribute-based filtration on top, just before the response is sent to the front end.

Another application I designed has a multi-tenant architecture. The client needed granular access rights for users in different organizations. We ended up using multi-role-permission access control. This means that a user’s access rights are determined by the permissions associated with all their roles in the system.
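A multi-role-permission check can be sketched as a union over role permissions, scoped by organization. The roles, permissions, users, and tenant names below are hypothetical and are not taken from the application described above.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "clinician": {"record:view", "record:update"},
    "billing": {"invoice:view", "invoice:create"},
    "org_admin": {"user:invite", "record:view"},
}

# A user's roles are scoped to an organization (tenant).
USER_ROLES = {
    ("alice", "clinic_a"): {"clinician", "org_admin"},
    ("alice", "clinic_b"): {"billing"},
}


def effective_permissions(user: str, org: str) -> set[str]:
    """Union of the permissions granted by every role the user holds in this org."""
    permissions: set[str] = set()
    for role in USER_ROLES.get((user, org), set()):
        permissions |= ROLE_PERMISSIONS.get(role, set())
    return permissions


def can(user: str, org: str, permission: str) -> bool:
    """Check a single permission for a user within one organization."""
    return permission in effective_permissions(user, org)
```

Keying roles by `(user, org)` is one way to ensure that rights granted in one tenant never leak into another.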

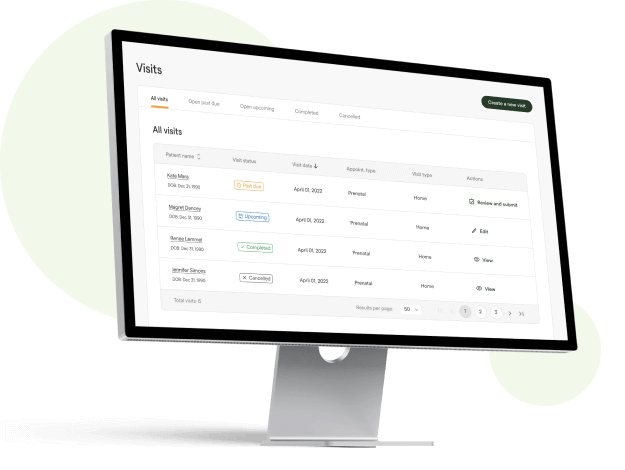

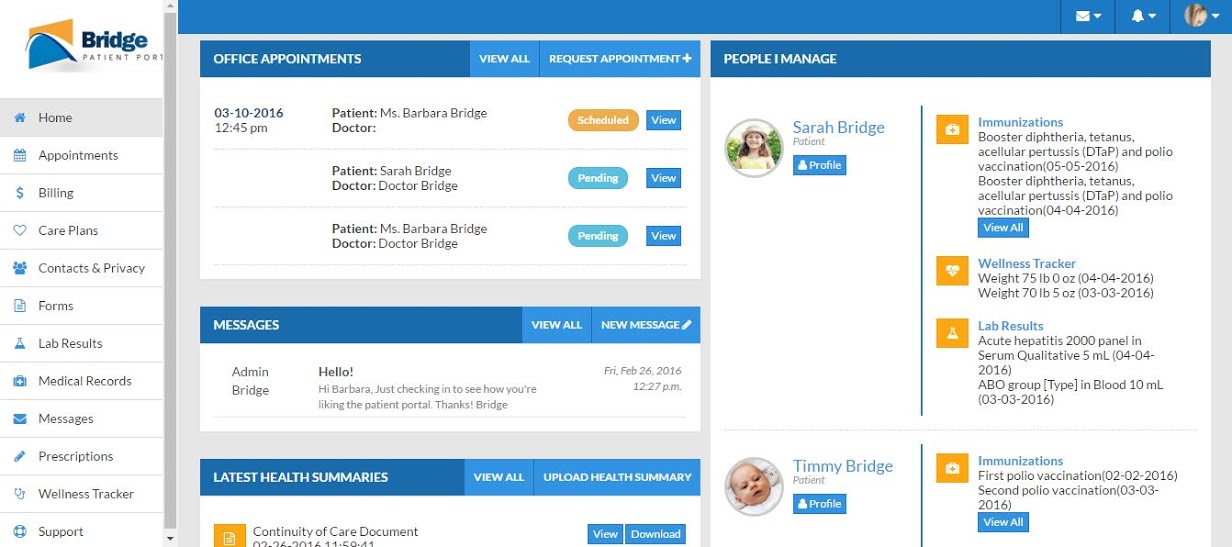

A custom EMR for lactation practice, developed by MindK

Person or entity authentication

After you’ve assigned privileges, your system should be able to verify that the person trying to access PHI is who they claim to be. The law offers several general ways to implement this safeguard:

- Biometrics (e.g. a fingerprint, a voice or face ID).

- Strong password.

- Physical means of identification (e.g. a key, card, or a token).

- Personal Identification Number (PIN).

Passwords are the most common authentication method. According to Verizon, they are also responsible for 63% of data breaches. Enforcing stronger passwords is essential. They should have at least 8 characters, mix capital and lowercase letters, numbers, and special characters, and avoid common combinations and username variations.

There is no such thing as too much security. Source: mailbox.org
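The password rules listed above translate into a short validation function. This is a sketch only: the function name and the tiny denylist are assumptions, and a production system would check against a much larger list of known weak passwords.

```python
import string

# Tiny illustrative denylist; a real one would be far larger.
COMMON_PASSWORDS = {"password", "pa$$word", "12345678", "qwerty123"}


def password_problems(password: str, username: str) -> list[str]:
    """Return the list of rules the password breaks (empty means acceptable)."""
    problems = []
    if len(password) < 8:
        problems.append("shorter than 8 characters")
    if not any(c.isupper() for c in password):
        problems.append("no capital letter")
    if not any(c.islower() for c in password):
        problems.append("no lowercase letter")
    if not any(c.isdigit() for c in password):
        problems.append("no number")
    if not any(c in string.punctuation for c in password):
        problems.append("no special character")
    if password.lower() in COMMON_PASSWORDS:
        problems.append("common combination")
    if username and username.lower() in password.lower():
        problems.append("contains the username")
    return problems
```

Returning every broken rule, not just the first, lets the sign-up form tell users exactly what to fix.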

Forcing users to frequently change passwords can actually harm your security efforts. People often come up with unoriginal combinations that are easy to crack (e.g. password ⇒ pa$$word).

Here are better options for HIPAA-compliant software development:

Multi-factor authentication. MFA combines a secure password with a second method of verification. This can be anything from a biometric scanner to a one-time SMS code or an authentication app. HIPAA does not specify MFA rules. However, the National Institute of Standards and Technology (NIST) recommends MFA as a key step to enhance security. The idea is that even if one factor is compromised, the attacker won’t be able to access the system without the other factor(s).

Biometric authentication is based on unique characteristics, such as fingerprints, iris, and/or facial patterns. These biological features cannot be easily duplicated or shared. This makes biometrics an increasingly popular feature in HIPAA-compliant web development.
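For the authentication-app flavor of MFA mentioned above, the second factor is usually a time-based one-time password (TOTP, RFC 6238). The sketch below uses only the Python standard library; the function names are assumptions, and a production system would normally rely on a vetted library and also handle clock drift and rate limiting.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret_b32: str, at: Optional[float] = None, digits: int = 6, step: int = 30) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    key = base64.b32decode(secret_b32, casefold=True)
    # The moving factor is the number of 30-second steps since the Unix epoch.
    counter = int((time.time() if at is None else at) // step)
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret_b32: str, submitted: str, at: Optional[float] = None) -> bool:
    """Compare the submitted code with the current window in constant time."""
    return hmac.compare_digest(totp(secret_b32, at), submitted)
```

The server stores one shared secret per user and calls `verify_totp` only after the password check has already passed, so both factors are required.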

Transmission security

If United Healthcare had had either MFA or biometric authentication in place, the hackers would’ve failed to expose the data of 110 million people. But secure authentication isn’t enough.

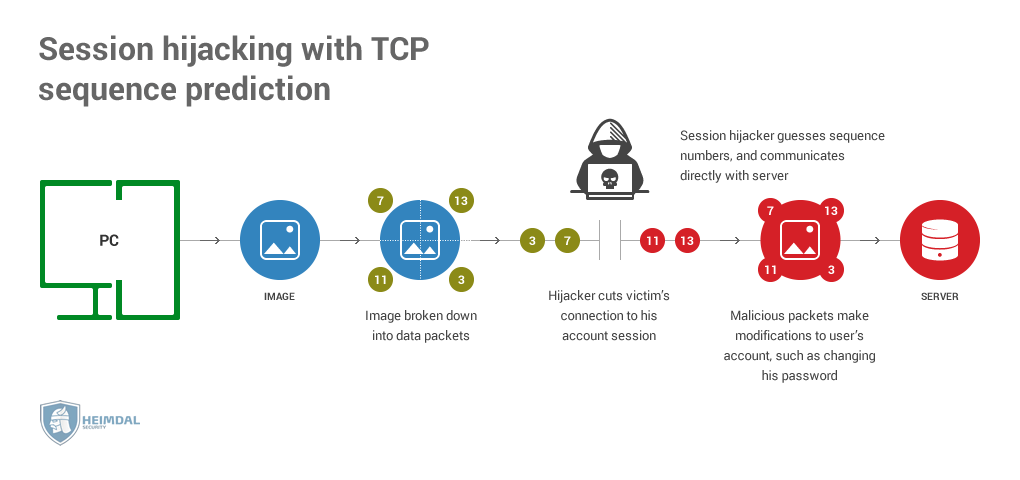

Some attackers might get between the user’s device and your servers. This way, the hackers could access the PHI without compromising the account. This is known as session hijacking, a kind of man-in-the-middle attack.

One of the possible ways to hijack a session. Source: Heimdal Security

This is one of the reasons why the HIPAA Security Rule requires encryption of the PHI sent over the network and between the different tiers of the system. Common protocols for this include:

- HTTPS encrypts communication between a web browser and a server. Thus, any sensitive data transmitted between the two is protected from prying eyes.

- SSH provides encryption and authentication of data transmission between the client and server. It also allows secure remote access and file transfer.

- TLS and SSL encrypt the data sent between two endpoints. Without decryption keys, PHI looks like a meaningless string of characters.

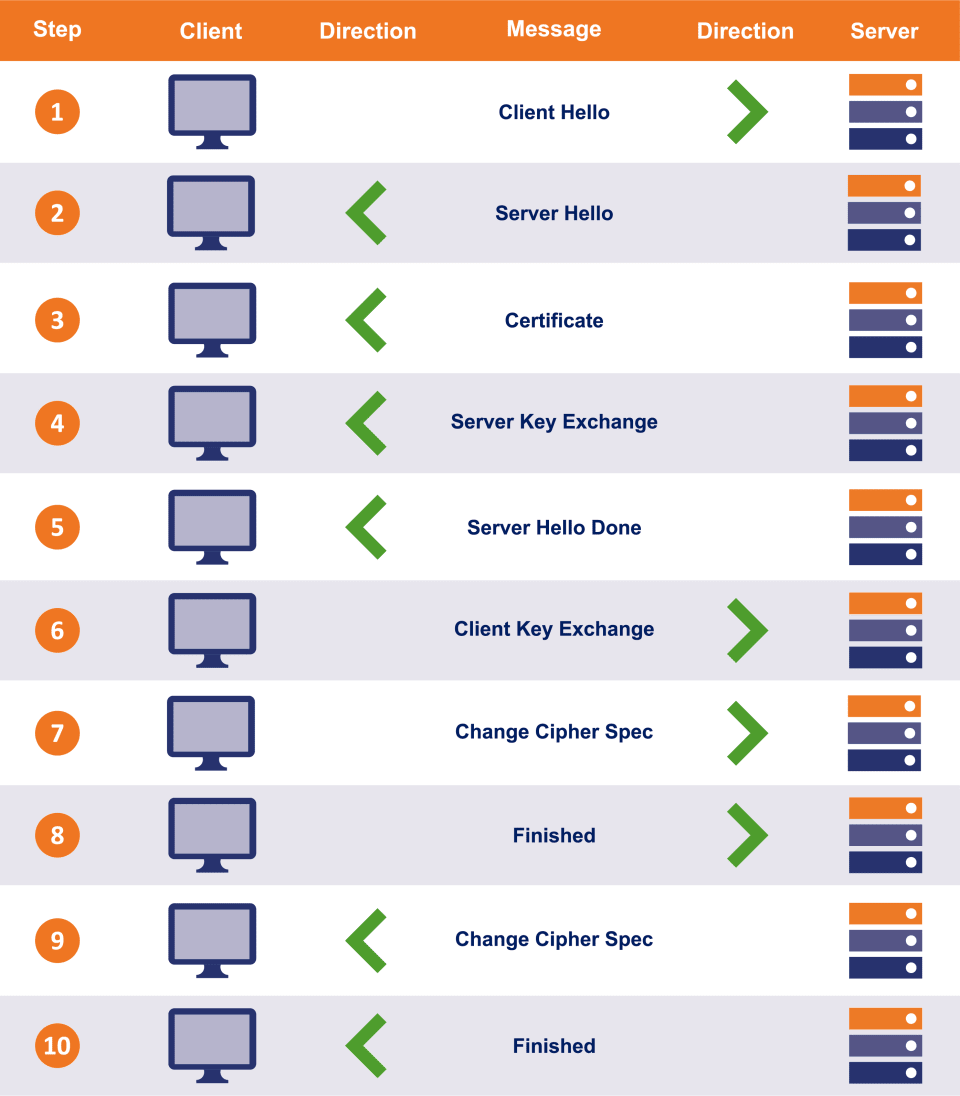

A file called an SSL certificate ties your public key to your digital identity. When establishing an HTTPS connection with your application, the browser requests your certificate. The client then checks its credibility and initiates the so-called SSL handshake.

The result is an encrypted communication channel between the user and your app.

SSL handshake; source: the SSL store

To enable HTTPS for your app, get an SSL certificate from one of the trusted providers and properly install it.
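The same protection applies to calls between the tiers of your own system. As a hedged illustration, here is how a Python service could build a TLS client configuration that verifies certificates and refuses anything older than TLS 1.2. The helper name is hypothetical, and the TLS 1.2 floor is an assumption that matches common practice.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """TLS client context that verifies certificates and refuses pre-1.2 protocols."""
    # The default context already enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Reject SSL 3.0, TLS 1.0, and TLS 1.1 handshakes.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The resulting context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=make_client_context())`.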

Another requirement is to send files containing PHI over a secure protocol such as SFTP (which runs over SSH) or FTPS instead of regular FTP.

Finally, email isn’t a secure way to send PHI. Popular services like Gmail don’t provide the necessary protection. Emails with PHI require encryption if sent beyond the firewalled server. There are many encrypted email services and HIPAA-compliant APIs for secure messaging.

You should also implement policies that limit what information can be shared via email.

Secure API integrations

Data transmissions via third-party integrations require extra attention to security and privacy. HIPAA-compliant development requires you to be very careful about evaluating, selecting, and setting up third-party integrations. Here’s what we take into account at MindK:

- Security: The integration should use secure protocols like HTTPS and SSH for data transmission, and implement strong authentication and authorization measures.

- Compliance: The integration should comply with HIPAA and other relevant regulations, and have a clear data handling and privacy policy.

- Data ownership: Our client, a healthcare organization, should retain ownership of their data, and have the ability to control access.

- Data quality: The integration should provide accurate and reliable data that can be easily integrated into the healthcare organization’s systems.

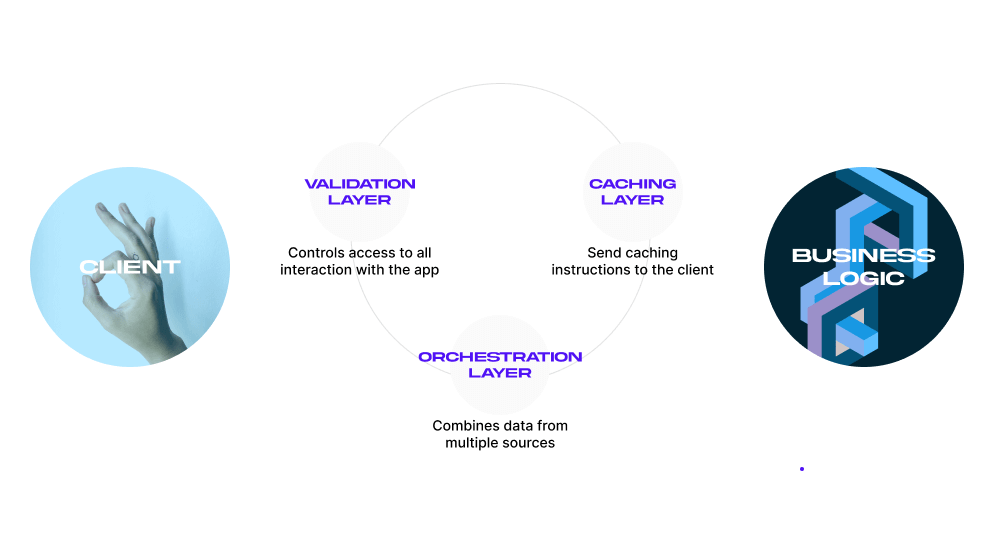

MindK guide to secure API development

Backup and storage encryption

Backups are essential for data integrity. A database corruption or a server crash could easily damage your PHI. So could a fire or an earthquake in a data center. That’s why it’s important to have multiple copies of PHI stored in different locations.

The HHS Office for Civil Rights (OCR) makes it clear – if you store PHI on a server or shared drive, use backup and storage encryption. No ifs, ands, or buts about it.

Backup refers to creating a duplicate copy of data that can be restored in the event of data loss or corruption. Storage encryption, on the other hand, involves converting data into an unreadable format that can only be accessed with a decryption key.

And here’s the best part: using both backup and storage encryption creates multi-layered protection of patient data. This is known as data-at-rest protection.

You can implement backup and storage encryption on the side of both the cloud provider and the healthcare organization. As I mentioned before, we recommend AWS with its built-in storage and backup services:

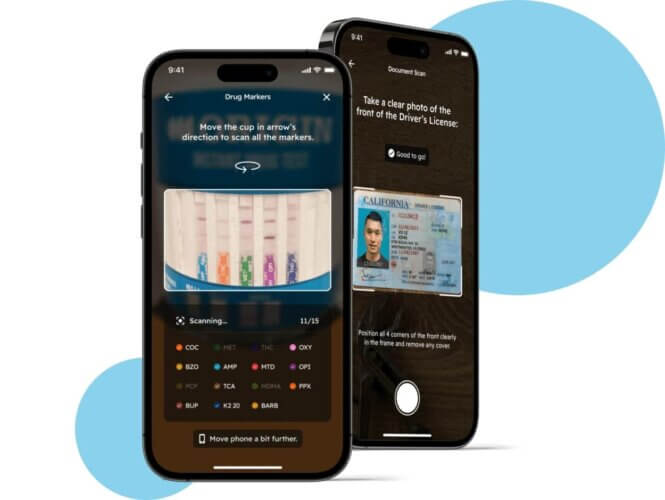

These methods can encrypt data at rest and in transit. However, you still need to configure and manage encryption appropriately. Replication with encryption, event logging, access logging, and other S3 bucket settings can be a tough challenge to solve. Using customer-managed KMS keys instead of the less secure default option is another hurdle our DevOps team had to solve for an AI-powered health testing app.

Healthcare organizations can also handle all backup and storage encryption on a dedicated server. But remember, this comes with added responsibility!

A backup is useless if you can’t restore it. HIPAA-compliant web development involves regular testing for recovery failures. So, log the downtime and any failures to back up the PHI. The backups themselves must also comply with HIPAA security standards.

AI-powered healthcare app built by MindK
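One simple way to test that a backup can actually be restored is to compare a checksum of the restored copy with the original. The sketch below assumes both files are reachable on the local file system; the function names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large backups don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def restore_matches(original: Path, restored: Path) -> bool:
    """A restore test passes only if the restored copy is byte-identical."""
    return sha256_of(original) == sha256_of(restored)
```

Running a check like this on a schedule and logging the result gives you evidence of recovery testing to show an auditor.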

PHI disposal

You should permanently destroy PHI when no longer needed. As long as its copy remains in one of your backups, the data isn’t considered “disposed of”.

PHI can hide in many unexpected places: photocopiers, scanners, biomedical equipment (e.g. MRI or ultrasound machines), portable devices (e.g. laptops), old floppy disks, USB flash drives, DVDs/CDs, memory cards, flash memory in motherboards and network cards, ROM/RAM memory, etc.

Back in 2010, Affinity Health Plan returned its photocopiers to the leasing company. It didn’t, however, erase their hard drives. The resulting breach exposed the personal information of more than 344,000 patients. Affinity had to pay $1.2 million for this little incident.

Audit controls

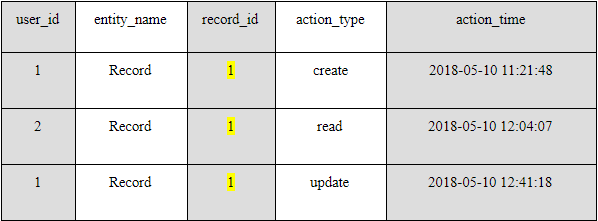

HIPAA-compliant app development requires you to keep an Activity Audit Log (AAL). Monitor what is done to the PHI stored in your system. Record each time a user logs in and out. You should know who accessed, updated, or deleted sensitive data, as well as when and where it happened.

Monitoring can be done via software, hardware, or procedural means. A simple solution would be to use a table in a database or a log file to record all the interactions with the patient information.

The table should consist of five columns:

- user_id. The unique identifier of the user who interacted with PHI;

- entity_name. The entity that the user has interacted with (An entity is a representation of some real-world concept in your database e.g. a health record);

- record_id. The entity’s identifier;

- action_type. The interaction’s nature (create, read, update, or delete); and

- action_time. The precise time of interaction.

In this example, a physician (user_id 1) has created a patient’s record, a radiologist has viewed it, and later the same physician has altered the record.
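The five-column table described above can be sketched with an in-memory SQLite database. The table name, the record ID 42, and the radiologist’s user ID 2 are assumptions made for the example.

```python
import sqlite3
from datetime import datetime, timezone

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE activity_audit_log (
        user_id     INTEGER NOT NULL,
        entity_name TEXT    NOT NULL,
        record_id   INTEGER NOT NULL,
        action_type TEXT    NOT NULL
            CHECK (action_type IN ('create', 'read', 'update', 'delete')),
        action_time TEXT    NOT NULL
    )
""")


def log_action(user_id: int, entity_name: str, record_id: int, action_type: str) -> None:
    """Append one row for a single interaction with PHI, timestamped in UTC."""
    conn.execute(
        "INSERT INTO activity_audit_log VALUES (?, ?, ?, ?, ?)",
        (user_id, entity_name, record_id, action_type,
         datetime.now(timezone.utc).isoformat()),
    )
    conn.commit()


# The physician creates a record, the radiologist views it, the physician edits it.
log_action(1, "health_record", 42, "create")
log_action(2, "health_record", 42, "read")
log_action(1, "health_record", 42, "update")
```

The `CHECK` constraint rejects unknown action types, which keeps the log consistent enough to query during an audit.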

HIPAA compliance for SaaS companies, other business associates, and covered entities requires maintaining an activity audit log for at least six years from the date of its creation or the date when it was last in effect, whichever is later.

The absence of audit controls could lead to higher fines. But just having a log isn’t enough! Make sure to encrypt and store activity audit logs in a secure location, back them up, and limit access to authorized personnel only.

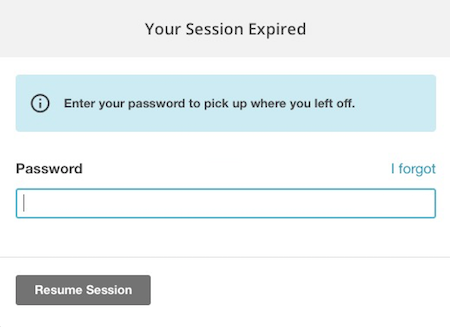

Automatic logoff

A system with PHI should automatically terminate any session after a set period of inactivity. To continue, the user must re-enter their password or re-authenticate another way. This protects PHI if somebody loses the device while logged into your app.

The exact period of inactivity triggering the logout depends on the use case.

With a secure workstation in a highly protected environment, you can set the timer for 10-15 minutes. For web-based solutions, this period shouldn’t exceed 10 minutes. And for a mobile app, set the timeout for 2-3 minutes.

Source: Mailchimp
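The inactivity check itself is simple. Here is a sketch that uses the upper bounds suggested above as idle limits; the client type names and the function name are assumptions.

```python
from datetime import datetime, timedelta

# Idle limits based on the upper bounds suggested in the text.
IDLE_LIMITS = {
    "workstation": timedelta(minutes=15),
    "web": timedelta(minutes=10),
    "mobile": timedelta(minutes=3),
}


def session_expired(last_activity: datetime, now: datetime, client_type: str) -> bool:
    """True once the session has been idle longer than its client's limit."""
    return now - last_activity > IDLE_LIMITS[client_type]
```

The server should run this check on every request and invalidate the session on its side, since a timer that lives only in the browser or app can be bypassed.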

Extra requirements for HIPAA-compliant mobile app development

Mobile devices present many additional risks. A smartphone could easily be stolen or lost in a high-traffic area, compromising sensitive info. To prevent this, use:

- Screen lock (Android/iOS);

- Full-device encryption (Android/iOS); and

- Remote data erasure (Android/iOS).

You can’t force users to use these features, but you can encourage them to do so. HIPAA-compliant app development requires user onboarding, helpful tooltips, and educational emails/push notifications. Users can also store PHI in a secure container separately from the personal data. This way, they can remotely erase the health information without affecting anything else.

Many physicians use personal smartphones to send health information. You can neutralize this threat with secure messaging integrations or encrypted password-protected health portals for patients and doctors.

Push notifications aren’t secure by default, as they can appear on a locked screen. One solution is to send notifications without PHI. The same applies to SMS and any automatic messages.

Source: Bridge Patient Portal

HIPAA-compliant app development also requires secure coding practices. These are the minimum requirements we use and recommend at MindK:

- Secure development environments. Our engineers use a secure development environment to support secure coding practices, code reviews, and security testing tools.

- Strong password storage and management. Passwords should be stored as salted hashes produced by industry-standard algorithms, never in plain text. They should never be freely available.

- Internal access controls. Access to sensitive data and systems should be restricted to authorized personnel only. Our developers must implement access controls and logging mechanisms to track and monitor data access.

- Secure coding libraries and frameworks. We use secure coding libraries and frameworks, designed to prevent common security vulnerabilities.
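As an illustration of the password storage item in the list above, here is a minimal salted hashing sketch based on PBKDF2 from the Python standard library. The iteration count is an assumed work factor, and the function names are hypothetical; many teams use a dedicated password hashing library instead.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # assumed work factor; tune it for your hardware


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key); store both, never the password itself."""
    salt = os.urandom(16)  # unique random salt per password
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, expected_key: bytes) -> bool:
    """Re-derive the key with the stored salt and compare in constant time."""
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(key, expected_key)
```

Because each password gets its own random salt, two users with the same password end up with different stored values.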

MindK has been named one of the top Healthcare software development companies according to DesignRush B2B marketplace

GDPR and other healthcare data security standards

In addition to learning about HIPAA compliance for software development, you might want to consider other relevant regulations. The FDA, for example, may classify some mHealth apps as medical devices if they influence the decision-making process of healthcare staff.

The section below contains a list of such regulations and an interactive tool to help you navigate beyond HIPAA compliance for software vendors.

GDPR (General Data Protection Regulation). GDPR applies to all industries in the EU, including healthcare. Organizations that collect and process personal health data from individuals located in the EU must comply with GDPR requirements. They include obtaining explicit consent, implementing appropriate technical data protection measures, and promptly reporting data breaches to authorities and affected individuals.

If your software handles the data of even a single EU resident, the 15-step guide to GDPR compliance is an essential resource.

HITRUST Common Security Framework (CSF) is a comprehensive security and privacy framework with a set of controls for protecting sensitive healthcare data. Besides HIPAA requirements, it includes other relevant security and privacy regulations, industry standards, and best practices. Many healthcare organizations choose to adopt the HITRUST CSF to demonstrate compliance with multiple regulatory requirements and enhance the overall security posture.

ISO 27799 is a collection of best practices designed specifically for handling health data.

ISO 27001 is a standard that specifies the requirements for information security management systems.

HITECH Act (Health Information Technology for Economic and Clinical Health) provides funding for implementing electronic health records. It also promotes the adoption of healthcare technology and strengthens HIPAA’s privacy and security protections.

The cost of HIPAA compliance in application development

Healthcare apps range from minimalistic symptom trackers to massive EMR systems. Their cost varies greatly depending on the number of features, complexity, technologies, team composition, and other factors detailed in the guide to web application development cost.

In my experience, a minimum lovable product (MLP) typically requires 2,000 – 2,500 hours of development time. Depending on the team, this adds up to $80,000 – $250,000 of expenses.

You then need to add features and activities specific to HIPAA-compliant software development. Here’s the napkin math.

| Feature/Activity | Description | Time (hours) | Cost (USD) |
| --- | --- | --- | --- |
| User Authentication and Access Control | Multi-factor authentication (MFA), role-based access control (RBAC), and unique user IDs to restrict access to ePHI. | 120-160 | $4,800-$16,000 |
| Data Encryption | AES-256 encryption for data at rest, TLS 1.2 or higher for data in transit. Includes encryption key management. | 80-120 | $3,200-$12,000 |
| Audit Logging and Monitoring | System logs to track access to ePHI, automated alerts for suspicious activity, secure storage for logs. | 120-160 | $4,800-$16,000 |
| Data Integrity Checks | Mechanisms like hashing and checksum verifications to ensure data integrity. | 40-60 | $1,600-$6,000 |
| Data Anonymization and Masking | Mask sensitive data in records where it’s not required, allowing minimal disclosure. Also, anonymize data for analytics. | 80-120 | $3,200-$12,000 |
| Emergency Access Procedures | Secure access paths for emergency situations while maintaining compliance (break-glass protocols). | 40-60 | $1,600-$6,000 |
| Backup and Recovery System | Automated, encrypted backups of ePHI with recovery procedures to maintain availability. | 120-160 | $4,800-$16,000 |
| Transmission (API) Security | Secure APIs using OAuth 2.0, token-based authentication, and secure API gateways. | 160-200 | $6,400-$20,000 |
| HIPAA Training and Compliance Documentation | Training materials for developers and staff on HIPAA compliance, documentation of compliance processes, and risk assessments. | 80-100 | $3,200-$10,000 |
| Total | | 840-1,140 | $35,000-$115,000 |

Thus, HIPAA-compliant software development costs roughly $115,000–$365,000 in total: the MLP budget plus the compliance work listed above.
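The napkin math can be reproduced in a few lines. The hourly rates of $40 and $100 are not stated in the article; they are an assumption inferred from the table rows (for example, 120 hours for $4,800 and 160 hours for $16,000).

```python
# (feature, hours_low, hours_high), copied from the table above.
COMPLIANCE_FEATURES = [
    ("User Authentication and Access Control", 120, 160),
    ("Data Encryption", 80, 120),
    ("Audit Logging and Monitoring", 120, 160),
    ("Data Integrity Checks", 40, 60),
    ("Data Anonymization and Masking", 80, 120),
    ("Emergency Access Procedures", 40, 60),
    ("Backup and Recovery System", 120, 160),
    ("Transmission (API) Security", 160, 200),
    ("HIPAA Training and Compliance Documentation", 80, 100),
]
RATE_LOW, RATE_HIGH = 40, 100                  # USD per hour, inferred from the table rows
MLP_COST_LOW, MLP_COST_HIGH = 80_000, 250_000  # MLP budget quoted earlier

hours_low = sum(low for _, low, _ in COMPLIANCE_FEATURES)
hours_high = sum(high for _, _, high in COMPLIANCE_FEATURES)
compliance_low = hours_low * RATE_LOW
compliance_high = hours_high * RATE_HIGH
total_low = MLP_COST_LOW + compliance_low
total_high = MLP_COST_HIGH + compliance_high

print(f"Compliance: {hours_low}-{hours_high} h, ${compliance_low:,}-${compliance_high:,}")
print(f"Total:      ${total_low:,}-${total_high:,}")
```

The exact sums are $33,600-$114,000 for the compliance work and $113,600-$364,000 overall, which the figures in the text round up to the nearest $5,000.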

Many cost-saving techniques can decrease this price tag. One such example is using API integrations and low-code SaaS development. Another example is our proprietary AI framework that can save as much as 30% of time and budget for HIPAA-compliant app development.

Conclusion

The recent United Healthcare hack is yet more proof of just how important and criminally overlooked security is in the industry.

From my own experience, the earlier you consider HIPAA requirements, the less time and money you’ll waste fixing issues. And I’m not even talking about the fines, the human cost, and a loss of reputation severe enough to bury a fledgling startup.

To prevent this, you need to learn the key terms, features, and steps involved in HIPAA-compliant application development. If you want to explore technical aspects focused on AWS and infrastructure as code, check our guides on HIPAA compliance for healthcare startups and legacy SaaS.

Beyond implementing these safeguards, you need to convince an auditor that you’ve done enough to protect customer data. This means providing specifications for each release, security testing plans, and their results.

We’ve made this an integral part of software development at MindK. So, if you need assistance for your project, just drop me a line.